Compare commits

1 Commit

version/20 ... web/fix-cs

| Author | SHA1 | Date | |
|---|---|---|---|
| | 403184ad73 | | |

@ -1,5 +1,5 @@
[bumpversion]
current_version = 2024.6.0-rc1
current_version = 2024.2.2
tag = True
commit = True
parse = (?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)(?:-(?P<rc_t>[a-zA-Z-]+)(?P<rc_n>[1-9]\\d*))?

@ -21,8 +21,6 @@ optional_value = final

[bumpversion:file:schema.yml]

[bumpversion:file:blueprints/schema.json]

[bumpversion:file:authentik/__init__.py]

[bumpversion:file:internal/constants/constants.go]
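The `parse` value is the regular expression bumpversion uses to split the current version into named parts. The sketch below tries it with Python's `re` module as a strict full match. It assumes the value reaches the regex engine unchanged. Under that assumption, `\\d*` in the line as written matches a literal backslash followed by zero or more `d` characters, not extra digits. The sketch therefore also compiles a variant with `\d*`, which is presumably what was intended. `parse_version` is a hypothetical helper.

```python
from __future__ import annotations

import re

# The `parse` value exactly as written in the config. In a raw string `\\d`
# stays doubled, so the regex engine sees "literal backslash, then d*".
AS_WRITTEN = (
    r"(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)"
    r"(?:-(?P<rc_t>[a-zA-Z-]+)(?P<rc_n>[1-9]\\d*))?"
)

# Assumed intent: `\d*`, so the release-candidate number may have more digits.
PARSE = re.compile(
    r"(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)"
    r"(?:-(?P<rc_t>[a-zA-Z-]+)(?P<rc_n>[1-9]\d*))?"
)


def parse_version(value: str) -> dict[str, str | None]:
    """Split a version string into its named parts, or raise ValueError."""
    match = PARSE.fullmatch(value)
    if match is None:
        raise ValueError(f"unparseable version: {value!r}")
    return match.groupdict()


if __name__ == "__main__":
    # {'major': '2024', 'minor': '6', 'patch': '0', 'rc_t': 'rc', 'rc_n': '1'}
    print(parse_version("2024.6.0-rc1"))
    # {'major': '2024', 'minor': '2', 'patch': '2', 'rc_t': None, 'rc_n': None}
    print(parse_version("2024.2.2"))
    # The pattern as written cannot fully match a release candidate: None
    print(re.fullmatch(AS_WRITTEN, "2024.6.0-rc1"))
```

With a non-anchored search instead of `fullmatch`, the as-written pattern still matches the `2024.6.0` prefix but leaves `rc_t` and `rc_n` empty. That is why the difference is easy to miss.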

2

.github/FUNDING.yml

@ -1 +1 @@
custom: https://goauthentik.io/pricing/
github: [BeryJu]

@ -12,7 +12,7 @@ should_build = str(os.environ.get("DOCKER_USERNAME", None) is not None).lower()
branch_name = os.environ["GITHUB_REF"]
if os.environ.get("GITHUB_HEAD_REF", "") != "":
branch_name = os.environ["GITHUB_HEAD_REF"]
safe_branch_name = branch_name.replace("refs/heads/", "").replace("/", "-").replace("'", "-")
safe_branch_name = branch_name.replace("refs/heads/", "").replace("/", "-")

image_names = os.getenv("IMAGE_NAME").split(",")
image_arch = os.getenv("IMAGE_ARCH") or None

@ -54,9 +54,9 @@ image_main_tag = image_tags[0]
image_tags_rendered = ",".join(image_tags)

with open(os.environ["GITHUB_OUTPUT"], "a+", encoding="utf-8") as _output:
print(f"shouldBuild={should_build}", file=_output)
print(f"sha={sha}", file=_output)
print(f"version={version}", file=_output)
print(f"prerelease={prerelease}", file=_output)
print(f"imageTags={image_tags_rendered}", file=_output)
print(f"imageMainTag={image_main_tag}", file=_output)
print("shouldBuild=%s" % should_build, file=_output)
print("sha=%s" % sha, file=_output)
print("version=%s" % version, file=_output)
print("prerelease=%s" % prerelease, file=_output)
print("imageTags=%s" % image_tags_rendered, file=_output)
print("imageMainTag=%s" % image_main_tag, file=_output)
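The branch-name lines first pick `GITHUB_HEAD_REF` when it is non-empty (GitHub sets it for pull-request events) and then sanitize the name for use as an image tag. The two `safe_branch_name` variants differ only in whether an apostrophe is also replaced. The sketch below wraps the longer variant in standalone functions so you can check what a given ref turns into. `pick_branch` and `make_safe_branch_name` are hypothetical names, and the sample ref values are made up.

```python
from typing import Mapping


def pick_branch(env: Mapping[str, str]) -> str:
    """Prefer a non-empty GITHUB_HEAD_REF over GITHUB_REF, as the script does."""
    branch = env["GITHUB_REF"]
    if env.get("GITHUB_HEAD_REF", "") != "":
        branch = env["GITHUB_HEAD_REF"]
    return branch


def make_safe_branch_name(branch: str) -> str:
    """Drop the refs/heads/ prefix and replace "/" and "'" with "-".

    Same chain of str.replace() calls as the longer safe_branch_name line.
    """
    return branch.replace("refs/heads/", "").replace("/", "-").replace("'", "-")


if __name__ == "__main__":
    env = {"GITHUB_REF": "refs/pull/1/merge", "GITHUB_HEAD_REF": "web/fix-cs"}
    print(make_safe_branch_name(pick_branch(env)))  # web-fix-cs
```

For the string and boolean values written here, the f-string and `%s` variants of the `print(...)` lines produce identical `key=value` lines in `$GITHUB_OUTPUT`.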

8

.github/actions/setup/action.yml

@ -16,25 +16,25 @@ runs:
sudo apt-get update
sudo apt-get install --no-install-recommends -y libpq-dev openssl libxmlsec1-dev pkg-config gettext
- name: Setup python and restore poetry
uses: actions/setup-python@v5
uses: actions/setup-python@v4
with:
python-version-file: "pyproject.toml"
cache: "poetry"
- name: Setup node
uses: actions/setup-node@v4
uses: actions/setup-node@v3
with:
node-version-file: web/package.json
cache: "npm"
cache-dependency-path: web/package-lock.json
- name: Setup go
uses: actions/setup-go@v5
uses: actions/setup-go@v4
with:
go-version-file: "go.mod"
- name: Setup dependencies
shell: bash
run: |
export PSQL_TAG=${{ inputs.postgresql_version }}
docker compose -f .github/actions/setup/docker-compose.yml up -d
docker-compose -f .github/actions/setup/docker-compose.yml up -d
poetry install
cd web && npm ci
- name: Generate config

2

.github/actions/setup/docker-compose.yml

@ -1,3 +1,5 @@
version: "3.7"

services:
postgresql:
image: docker.io/library/postgres:${PSQL_TAG:-16}
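Compose interpolates `${PSQL_TAG:-16}` shell-style. The default `16` is used when `PSQL_TAG` is unset *or* empty (the `:-` form), so an exported but empty `PSQL_TAG` still yields `postgres:16`. The sketch below models that rule in Python. `resolve_default` and `postgres_image` are hypothetical helpers and not part of Compose.

```python
import os
from typing import Mapping


def resolve_default(env: Mapping[str, str], name: str, default: str) -> str:
    """Model of Compose's ${NAME:-default}: use default if NAME is unset or empty."""
    value = env.get(name)
    return value if value else default


def postgres_image(env: Mapping[str, str] = os.environ) -> str:
    """Image reference produced by `postgres:${PSQL_TAG:-16}` for a given environment."""
    return "docker.io/library/postgres:" + resolve_default(env, "PSQL_TAG", "16")
```

The colon-less form `${PSQL_TAG-16}` would fall back only when the variable is unset, so an empty value would produce the invalid reference `postgres:`.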

1

.github/codespell-words.txt

@ -4,4 +4,3 @@ hass
warmup
ontext
singed
assertIn

65

.github/workflows/api-py-publish.yml

@ -1,65 +0,0 @@
name: authentik-api-py-publish
on:
push:
branches: [main]
paths:
- "schema.yml"
workflow_dispatch:
jobs:
build:
runs-on: ubuntu-latest
permissions:
id-token: write
steps:
- id: generate_token
uses: tibdex/github-app-token@v2
with:
app_id: ${{ secrets.GH_APP_ID }}
private_key: ${{ secrets.GH_APP_PRIVATE_KEY }}
- uses: actions/checkout@v4
with:
token: ${{ steps.generate_token.outputs.token }}
- name: Install poetry & deps
shell: bash
run: |
pipx install poetry || true
sudo apt-get update
sudo apt-get install --no-install-recommends -y libpq-dev openssl libxmlsec1-dev pkg-config gettext
- name: Setup python and restore poetry
uses: actions/setup-python@v5
with:
python-version-file: "pyproject.toml"
cache: "poetry"
- name: Generate API Client
run: make gen-client-py
- name: Publish package
working-directory: gen-py-api/
run: |
poetry build
- name: Publish package to PyPI
uses: pypa/gh-action-pypi-publish@release/v1
with:
packages-dir: gen-py-api/dist/
# We can't easily upgrade the API client being used due to poetry being poetry
# so we'll have to rely on dependabot
# - name: Upgrade /
# run: |
# export VERSION=$(cd gen-py-api && poetry version -s)
# poetry add "authentik_client=$VERSION" --allow-prereleases --lock
# - uses: peter-evans/create-pull-request@v6
# id: cpr
# with:
# token: ${{ steps.generate_token.outputs.token }}
# branch: update-root-api-client
# commit-message: "root: bump API Client version"
# title: "root: bump API Client version"
# body: "root: bump API Client version"
# delete-branch: true
# signoff: true
# # ID from https://api.github.com/users/authentik-automation[bot]
# author: authentik-automation[bot] <135050075+authentik-automation[bot]@users.noreply.github.com>
# - uses: peter-evans/enable-pull-request-automerge@v3
# with:
# token: ${{ steps.generate_token.outputs.token }}
# pull-request-number: ${{ steps.cpr.outputs.pull-request-number }}
# merge-method: squash

14

.github/workflows/ci-main.yml

@ -7,6 +7,8 @@ on:
- main
- next
- version-*
paths-ignore:
- website/**
pull_request:
branches:
- main

@ -50,6 +52,7 @@ jobs:
fail-fast: false
matrix:
psql:
- 12-alpine
- 15-alpine
- 16-alpine
steps:

@ -103,6 +106,7 @@ jobs:
fail-fast: false
matrix:
psql:
- 12-alpine
- 15-alpine
- 16-alpine
steps:

@ -128,7 +132,7 @@ jobs:
- name: Setup authentik env
uses: ./.github/actions/setup
- name: Create k8s Kind Cluster
uses: helm/kind-action@v1.10.0
uses: helm/kind-action@v1.9.0
- name: run integration
run: |
poetry run coverage run manage.py test tests/integration

@ -158,8 +162,6 @@ jobs:
glob: tests/e2e/test_provider_ldap* tests/e2e/test_source_ldap*
- name: radius
glob: tests/e2e/test_provider_radius*
- name: scim
glob: tests/e2e/test_source_scim*
- name: flows
glob: tests/e2e/test_flows*
steps:

@ -168,7 +170,7 @@ jobs:
uses: ./.github/actions/setup
- name: Setup e2e env (chrome, etc)
run: |
docker compose -f tests/e2e/docker-compose.yml up -d
docker-compose -f tests/e2e/docker-compose.yml up -d
- id: cache-web
uses: actions/cache@v4
with:

@ -250,8 +252,8 @@ jobs:
push: ${{ steps.ev.outputs.shouldBuild == 'true' }}
build-args: |
GIT_BUILD_HASH=${{ steps.ev.outputs.sha }}
cache-from: type=registry,ref=ghcr.io/goauthentik/dev-server:buildcache
cache-to: type=registry,ref=ghcr.io/goauthentik/dev-server:buildcache,mode=max
cache-from: type=gha
cache-to: type=gha,mode=max
platforms: linux/${{ matrix.arch }}
pr-comment:
needs:

6

.github/workflows/ci-outpost.yml

@ -29,7 +29,7 @@ jobs:
- name: Generate API
run: make gen-client-go
- name: golangci-lint
uses: golangci/golangci-lint-action@v6
uses: golangci/golangci-lint-action@v4
with:
version: v1.54.2
args: --timeout 5000s --verbose

@ -105,8 +105,8 @@ jobs:
GIT_BUILD_HASH=${{ steps.ev.outputs.sha }}
platforms: linux/amd64,linux/arm64
context: .
cache-from: type=registry,ref=ghcr.io/goauthentik/dev-${{ matrix.type }}:buildcache
cache-to: type=registry,ref=ghcr.io/goauthentik/dev-${{ matrix.type }}:buildcache,mode=max
cache-from: type=gha
cache-to: type=gha,mode=max
build-binary:
timeout-minutes: 120
needs:

8

.github/workflows/ci-web.yml

@ -34,13 +34,6 @@ jobs:
- name: Eslint
working-directory: ${{ matrix.project }}/
run: npm run lint
lint-lockfile:
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v4
- working-directory: web/
run: |
[ -z "$(jq -r '.packages | to_entries[] | select((.key | startswith("node_modules")) and (.value | has("resolved") | not)) | .key' < package-lock.json)" ]
lint-build:
runs-on: ubuntu-latest
steps:

@ -102,7 +95,6 @@ jobs:
run: npm run lit-analyse
ci-web-mark:
needs:
- lint-lockfile
- lint-eslint
- lint-prettier
- lint-lit-analyse
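The `lint-lockfile` job works as follows. The `jq` filter prints every `node_modules...` key in `package-lock.json` whose entry has no `resolved` field, and `[ -z "..." ]` fails the step when that output is non-empty. The sketch below is an equivalent check in Python. `unresolved_packages` and `check_lockfile` are hypothetical names, and the sample lockfile used to exercise them is made up.

```python
from __future__ import annotations

import json
from pathlib import Path


def unresolved_packages(lock: dict) -> list[str]:
    """Keys under "packages" that start with "node_modules" and lack "resolved".

    Mirrors the jq filter:
      .packages | to_entries[]
      | select((.key | startswith("node_modules")) and (.value | has("resolved") | not))
      | .key
    """
    return [
        key
        for key, value in lock.get("packages", {}).items()
        if key.startswith("node_modules") and "resolved" not in value
    ]


def check_lockfile(path: Path) -> int:
    """Print offending keys and return a shell-style exit code (0 = clean)."""
    lock = json.loads(path.read_text(encoding="utf-8"))
    missing = unresolved_packages(lock)
    for key in missing:
        print(key)
    return 1 if missing else 0
```

There is one small difference from `jq`. If `packages` is absent, `jq` exits with an error, whereas this sketch treats the lockfile as clean.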

8

.github/workflows/ci-website.yml

@ -12,13 +12,6 @@ on:
- version-*

jobs:
lint-lockfile:
runs-on: ubuntu-latest
steps:
- uses: actions/checkout@v4
- working-directory: website/
run: |
[ -z "$(jq -r '.packages | to_entries[] | select((.key | startswith("node_modules")) and (.value | has("resolved") | not)) | .key' < package-lock.json)" ]
lint-prettier:
runs-on: ubuntu-latest
steps:

@ -69,7 +62,6 @@ jobs:
run: npm run ${{ matrix.job }}
ci-website-mark:
needs:
- lint-lockfile
- lint-prettier
- test
- build

43

.github/workflows/gen-update-webauthn-mds.yml

@ -1,43 +0,0 @@
name: authentik-gen-update-webauthn-mds
on:
workflow_dispatch:
schedule:
- cron: '30 1 1,15 * *'

env:
POSTGRES_DB: authentik
POSTGRES_USER: authentik
POSTGRES_PASSWORD: "EK-5jnKfjrGRm<77"

jobs:
build:
runs-on: ubuntu-latest
steps:
- id: generate_token
uses: tibdex/github-app-token@v2
with:
app_id: ${{ secrets.GH_APP_ID }}
private_key: ${{ secrets.GH_APP_PRIVATE_KEY }}
- uses: actions/checkout@v4
with:
token: ${{ steps.generate_token.outputs.token }}
- name: Setup authentik env
uses: ./.github/actions/setup
- run: poetry run ak update_webauthn_mds
- uses: peter-evans/create-pull-request@v6
id: cpr
with:
token: ${{ steps.generate_token.outputs.token }}
branch: update-fido-mds-client
commit-message: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"
title: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"
body: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"
delete-branch: true
signoff: true
# ID from https://api.github.com/users/authentik-automation[bot]
author: authentik-automation[bot] <135050075+authentik-automation[bot]@users.noreply.github.com>
- uses: peter-evans/enable-pull-request-automerge@v3
with:
token: ${{ steps.generate_token.outputs.token }}
pull-request-number: ${{ steps.cpr.outputs.pull-request-number }}
merge-method: squash
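The schedule `'30 1 1,15 * *'` reads as minute 30, hour 1, days 1 and 15, every month, any weekday. GitHub Actions evaluates scheduled cron expressions in UTC, so the workflow fires at 01:30 UTC on the 1st and 15th. The sketch below computes the next run after a given moment. It handles only this specific expression, not general cron syntax, and `next_mds_run` is a hypothetical name.

```python
from datetime import datetime, timezone

RUN_DAYS = (1, 15)            # day-of-month field "1,15"
RUN_HOUR, RUN_MINUTE = 1, 30  # hour field "1", minute field "30"


def next_mds_run(after: datetime) -> datetime:
    """First 01:30 UTC on the 1st or 15th strictly later than `after`.

    Naive datetimes are treated as UTC; aware ones are converted to UTC.
    """
    if after.tzinfo is None:
        after = after.replace(tzinfo=timezone.utc)
    else:
        after = after.astimezone(timezone.utc)
    year, month = after.year, after.month
    # Checking this month and the next is enough: the 1st of next month
    # always lies after any moment in the current month.
    for _ in range(2):
        for day in RUN_DAYS:
            candidate = datetime(year, month, day, RUN_HOUR, RUN_MINUTE, tzinfo=timezone.utc)
            if candidate > after:
                return candidate
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    raise AssertionError("unreachable")
```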

12

.github/workflows/release-publish.yml

@ -155,12 +155,12 @@ jobs:
- uses: actions/checkout@v4
- name: Run test suite in final docker images
run: |
echo "PG_PASS=$(openssl rand 32 | base64)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(openssl rand 32 | base64)" >> .env
docker compose pull -q
docker compose up --no-start
docker compose start postgresql redis
docker compose run -u root server test-all
echo "PG_PASS=$(openssl rand -base64 32)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env
docker-compose pull -q
docker-compose up --no-start
docker-compose start postgresql redis
docker-compose run -u root server test-all
sentry-release:
needs:
- build-server
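Both forms of the `.env` lines generate 32 random bytes and base64-encode them. `openssl rand -base64 32` encodes inside OpenSSL, while `openssl rand 32 | base64` pipes the raw bytes through `base64`. Either way the value is 44 characters long: 32 bytes become 11 base64 groups, the last one padded with a single `=`. The sketch below is a Python equivalent using the standard library's `secrets` module. `random_b64` and `render_env` are hypothetical helpers.

```python
import base64
import secrets


def random_b64(n_bytes: int = 32) -> str:
    """n_bytes of CSPRNG output, base64-encoded (like `openssl rand -base64 32`)."""
    return base64.b64encode(secrets.token_bytes(n_bytes)).decode("ascii")


def render_env() -> str:
    """Build the two .env lines that the test-suite steps append."""
    return (
        f"PG_PASS={random_b64()}\n"
        f"AUTHENTIK_SECRET_KEY={random_b64()}\n"
    )
```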

10

.github/workflows/release-tag.yml

@ -14,16 +14,16 @@ jobs:
- uses: actions/checkout@v4
- name: Pre-release test
run: |
echo "PG_PASS=$(openssl rand 32 | base64)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(openssl rand 32 | base64)" >> .env
echo "PG_PASS=$(openssl rand -base64 32)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env
docker buildx install
mkdir -p ./gen-ts-api
docker build -t testing:latest .
echo "AUTHENTIK_IMAGE=testing" >> .env
echo "AUTHENTIK_TAG=latest" >> .env
docker compose up --no-start
docker compose start postgresql redis
docker compose run -u root server test-all
docker-compose up --no-start
docker-compose start postgresql redis
docker-compose run -u root server test-all
- id: generate_token
uses: tibdex/github-app-token@v2
with:

2

.github/workflows/repo-stale.yml

@ -23,7 +23,7 @@ jobs:
repo-token: ${{ steps.generate_token.outputs.token }}
days-before-stale: 60
days-before-close: 7
exempt-issue-labels: pinned,security,pr_wanted,enhancement,bug/confirmed,enhancement/confirmed,question,status/reviewing
exempt-issue-labels: pinned,security,pr_wanted,enhancement,bug/confirmed,enhancement/confirmed,question
stale-issue-label: wontfix
stale-issue-message: >
This issue has been automatically marked as stale because it has not had

@ -1,4 +1,4 @@
name: authentik-api-ts-publish
name: authentik-web-api-publish
on:
push:
branches: [main]

13

.vscode/settings.json

@ -4,21 +4,20 @@
"asgi",
"authentik",
"authn",
"entra",
"goauthentik",
"jwks",
"kubernetes",
"oidc",
"openid",
"passwordless",
"plex",
"saml",
"scim",
"slo",
"sso",
"totp",
"traefik",
"webauthn",
"traefik",
"passwordless",
"kubernetes",
"sso",
"slo",
"scim",
],
"todo-tree.tree.showCountsInTree": true,
"todo-tree.tree.showBadges": true,

38

Dockerfile

@ -1,7 +1,7 @@
# syntax=docker/dockerfile:1

# Stage 1: Build website
FROM --platform=${BUILDPLATFORM} docker.io/node:22 as website-builder
FROM --platform=${BUILDPLATFORM} docker.io/node:21 as website-builder

ENV NODE_ENV=production

@ -20,7 +20,7 @@ COPY ./SECURITY.md /work/
RUN npm run build-bundled

# Stage 2: Build webui
FROM --platform=${BUILDPLATFORM} docker.io/node:22 as web-builder
FROM --platform=${BUILDPLATFORM} docker.io/node:21 as web-builder

ENV NODE_ENV=production

@ -38,7 +38,7 @@ COPY ./gen-ts-api /work/web/node_modules/@goauthentik/api
RUN npm run build

# Stage 3: Build go proxy
FROM --platform=${BUILDPLATFORM} mcr.microsoft.com/oss/go/microsoft/golang:1.22-fips-bookworm AS go-builder
FROM --platform=${BUILDPLATFORM} docker.io/golang:1.22.1-bookworm AS go-builder

ARG TARGETOS
ARG TARGETARCH

@ -49,11 +49,6 @@ ARG GOARCH=$TARGETARCH

WORKDIR /go/src/goauthentik.io

RUN --mount=type=cache,id=apt-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/var/cache/apt \
dpkg --add-architecture arm64 && \
apt-get update && \
apt-get install -y --no-install-recommends crossbuild-essential-arm64 gcc-aarch64-linux-gnu

RUN --mount=type=bind,target=/go/src/goauthentik.io/go.mod,src=./go.mod \
--mount=type=bind,target=/go/src/goauthentik.io/go.sum,src=./go.sum \
--mount=type=cache,target=/go/pkg/mod \

@ -68,17 +63,17 @@ COPY ./internal /go/src/goauthentik.io/internal
COPY ./go.mod /go/src/goauthentik.io/go.mod
COPY ./go.sum /go/src/goauthentik.io/go.sum

ENV CGO_ENABLED=0

RUN --mount=type=cache,sharing=locked,target=/go/pkg/mod \
--mount=type=cache,id=go-build-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/root/.cache/go-build \
if [ "$TARGETARCH" = "arm64" ]; then export CC=aarch64-linux-gnu-gcc && export CC_FOR_TARGET=gcc-aarch64-linux-gnu; fi && \
CGO_ENABLED=1 GOEXPERIMENT="systemcrypto" GOFLAGS="-tags=requirefips" GOARM="${TARGETVARIANT#v}" \
go build -o /go/authentik ./cmd/server
GOARM="${TARGETVARIANT#v}" go build -o /go/authentik ./cmd/server

# Stage 4: MaxMind GeoIP
FROM --platform=${BUILDPLATFORM} ghcr.io/maxmind/geoipupdate:v7.0.1 as geoip
FROM --platform=${BUILDPLATFORM} ghcr.io/maxmind/geoipupdate:v6.1 as geoip

ENV GEOIPUPDATE_EDITION_IDS="GeoLite2-City GeoLite2-ASN"
ENV GEOIPUPDATE_VERBOSE="1"
ENV GEOIPUPDATE_VERBOSE="true"
ENV GEOIPUPDATE_ACCOUNT_ID_FILE="/run/secrets/GEOIPUPDATE_ACCOUNT_ID"
ENV GEOIPUPDATE_LICENSE_KEY_FILE="/run/secrets/GEOIPUPDATE_LICENSE_KEY"

@ -89,7 +84,7 @@ RUN --mount=type=secret,id=GEOIPUPDATE_ACCOUNT_ID \
/bin/sh -c "/usr/bin/entry.sh || echo 'Failed to get GeoIP database, disabling'; exit 0"

# Stage 5: Python dependencies
FROM ghcr.io/goauthentik/fips-python:3.12.3-slim-bookworm-fips-full AS python-deps
FROM docker.io/python:3.12.2-slim-bookworm AS python-deps

WORKDIR /ak-root/poetry

@ -102,7 +97,7 @@ RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloa
RUN --mount=type=cache,id=apt-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/var/cache/apt \
apt-get update && \
# Required for installing pip packages
apt-get install -y --no-install-recommends build-essential pkg-config libpq-dev
apt-get install -y --no-install-recommends build-essential pkg-config libxmlsec1-dev zlib1g-dev libpq-dev

RUN --mount=type=bind,target=./pyproject.toml,src=./pyproject.toml \
--mount=type=bind,target=./poetry.lock,src=./poetry.lock \

@ -110,13 +105,12 @@ RUN --mount=type=bind,target=./pyproject.toml,src=./pyproject.toml \
--mount=type=cache,target=/root/.cache/pypoetry \
python -m venv /ak-root/venv/ && \
bash -c "source ${VENV_PATH}/bin/activate && \
pip3 install --upgrade pip && \
pip3 install poetry && \
poetry install --only=main --no-ansi --no-interaction --no-root && \
pip install --force-reinstall /wheels/*"
pip3 install --upgrade pip && \
pip3 install poetry && \
poetry install --only=main --no-ansi --no-interaction --no-root"

# Stage 6: Run
FROM ghcr.io/goauthentik/fips-python:3.12.3-slim-bookworm-fips-full AS final-image
FROM docker.io/python:3.12.2-slim-bookworm AS final-image

ARG GIT_BUILD_HASH
ARG VERSION

@ -133,7 +127,7 @@ WORKDIR /
# We cannot cache this layer otherwise we'll end up with a bigger image
RUN apt-get update && \
# Required for runtime
apt-get install -y --no-install-recommends libpq5 libmaxminddb0 ca-certificates && \
apt-get install -y --no-install-recommends libpq5 openssl libxmlsec1-openssl libmaxminddb0 ca-certificates && \
# Required for bootstrap & healtcheck
apt-get install -y --no-install-recommends runit && \
apt-get clean && \

@ -169,8 +163,6 @@ ENV TMPDIR=/dev/shm/ \
VENV_PATH="/ak-root/venv" \
POETRY_VIRTUALENVS_CREATE=false

ENV GOFIPS=1

HEALTHCHECK --interval=30s --timeout=30s --start-period=60s --retries=3 CMD [ "ak", "healthcheck" ]

ENTRYPOINT [ "dumb-init", "--", "ak" ]

36

Makefile

@ -9,7 +9,6 @@ PY_SOURCES = authentik tests scripts lifecycle .github
DOCKER_IMAGE ?= "authentik:test"

GEN_API_TS = "gen-ts-api"
GEN_API_PY = "gen-py-api"
GEN_API_GO = "gen-go-api"

pg_user := $(shell python -m authentik.lib.config postgresql.user 2>/dev/null)

@ -19,7 +18,6 @@ pg_name := $(shell python -m authentik.lib.config postgresql.name 2>/dev/null)
CODESPELL_ARGS = -D - -D .github/codespell-dictionary.txt \
-I .github/codespell-words.txt \
-S 'web/src/locales/**' \
-S 'website/developer-docs/api/reference/**' \
authentik \
internal \
cmd \

@ -47,12 +45,12 @@ test-go:
go test -timeout 0 -v -race -cover ./...

test-docker: ## Run all tests in a docker-compose
echo "PG_PASS=$(shell openssl rand 32 | base64)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(shell openssl rand 32 | base64)" >> .env
docker compose pull -q
docker compose up --no-start
docker compose start postgresql redis
docker compose run -u root server test-all
echo "PG_PASS=$(openssl rand -base64 32)" >> .env
echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env
docker-compose pull -q
docker-compose up --no-start
docker-compose start postgresql redis
docker-compose run -u root server test-all
rm -f .env

test: ## Run the server tests and produce a coverage report (locally)

@ -66,7 +64,7 @@ lint-fix: ## Lint and automatically fix errors in the python source code. Repor
codespell -w $(CODESPELL_ARGS)

lint: ## Lint the python and golang sources
bandit -r $(PY_SOURCES) -x web/node_modules -x tests/wdio/node_modules -x website/node_modules
bandit -r $(PY_SOURCES) -x node_modules
golangci-lint run -v

core-install:

@ -139,10 +137,7 @@ gen-clean-ts: ## Remove generated API client for Typescript
gen-clean-go: ## Remove generated API client for Go
rm -rf ./${GEN_API_GO}/

gen-clean-py: ## Remove generated API client for Python
rm -rf ./${GEN_API_PY}/

gen-clean: gen-clean-ts gen-clean-go gen-clean-py ## Remove generated API clients
gen-clean: gen-clean-ts gen-clean-go ## Remove generated API clients

gen-client-ts: gen-clean-ts ## Build and install the authentik API for Typescript into the authentik UI Application
docker run \

@ -160,20 +155,6 @@ gen-client-ts: gen-clean-ts ## Build and install the authentik API for Typescri
cd ./${GEN_API_TS} && npm i
\cp -rf ./${GEN_API_TS}/* web/node_modules/@goauthentik/api

gen-client-py: gen-clean-py ## Build and install the authentik API for Python
docker run \
--rm -v ${PWD}:/local \
--user ${UID}:${GID} \
docker.io/openapitools/openapi-generator-cli:v7.4.0 generate \
-i /local/schema.yml \
-g python \
-o /local/${GEN_API_PY} \
-c /local/scripts/api-py-config.yaml \
--additional-properties=packageVersion=${NPM_VERSION} \
--git-repo-id authentik \
--git-user-id goauthentik
pip install ./${GEN_API_PY}

gen-client-go: gen-clean-go ## Build and install the authentik API for Golang
mkdir -p ./${GEN_API_GO} ./${GEN_API_GO}/templates
wget https://raw.githubusercontent.com/goauthentik/client-go/main/config.yaml -O ./${GEN_API_GO}/config.yaml

@ -253,7 +234,6 @@ website-watch: ## Build and watch the documentation website, updating automatic
#########################

docker: ## Build a docker image of the current source tree
mkdir -p ${GEN_API_TS}
DOCKER_BUILDKIT=1 docker build . --progress plain --tag ${DOCKER_IMAGE}

#########################

@ -25,10 +25,10 @@ For bigger setups, there is a Helm Chart [here](https://github.com/goauthentik/h

|

||||

|

||||

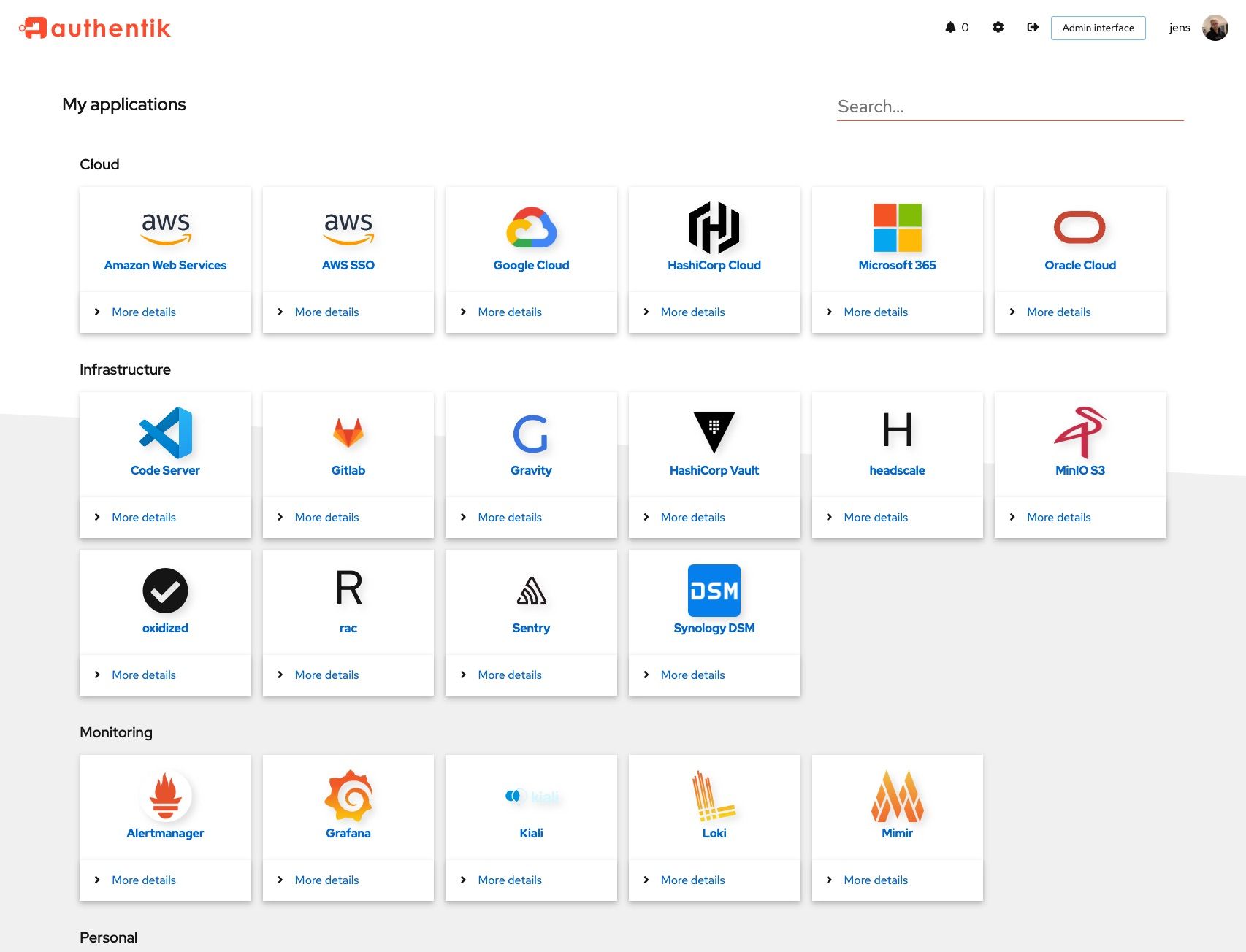

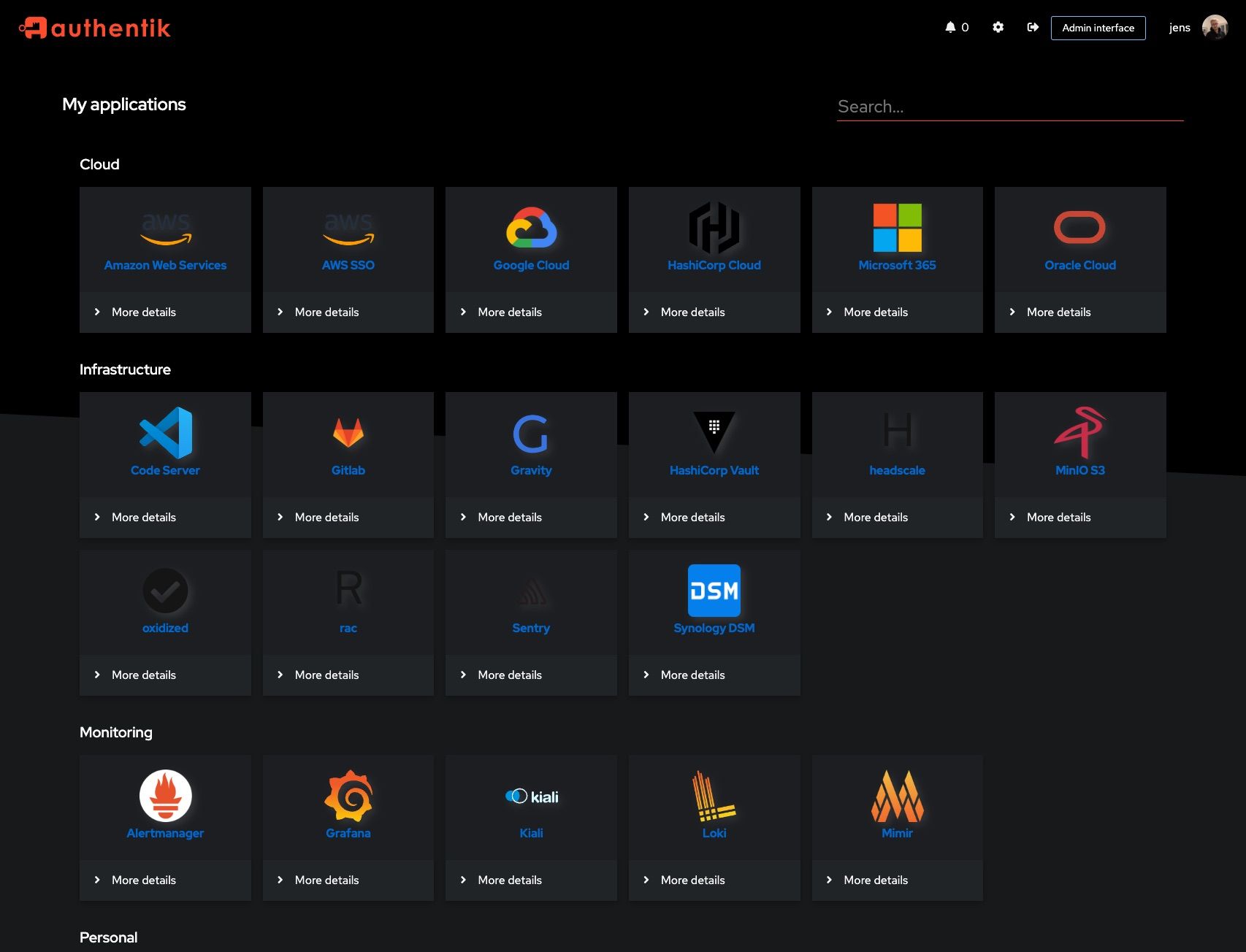

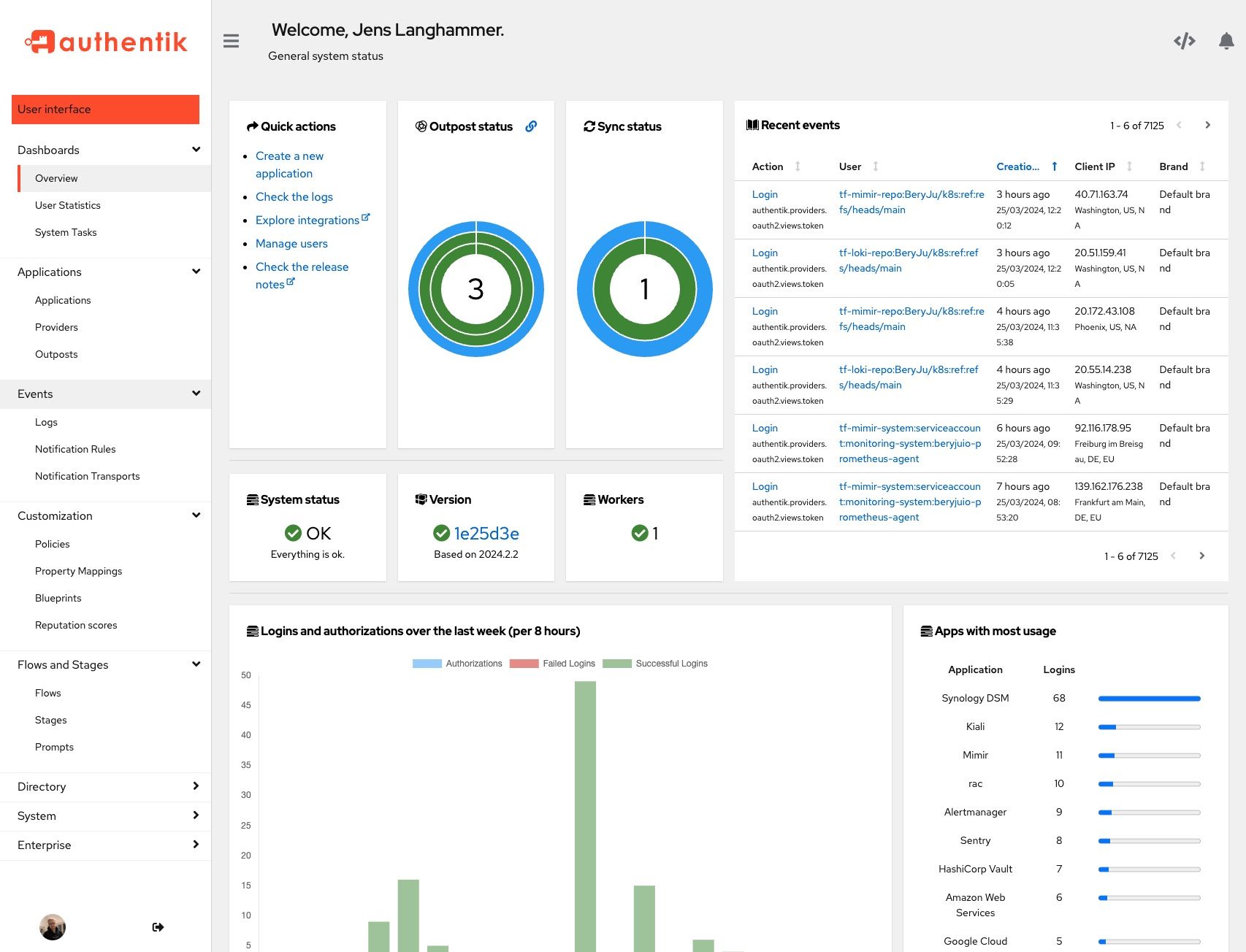

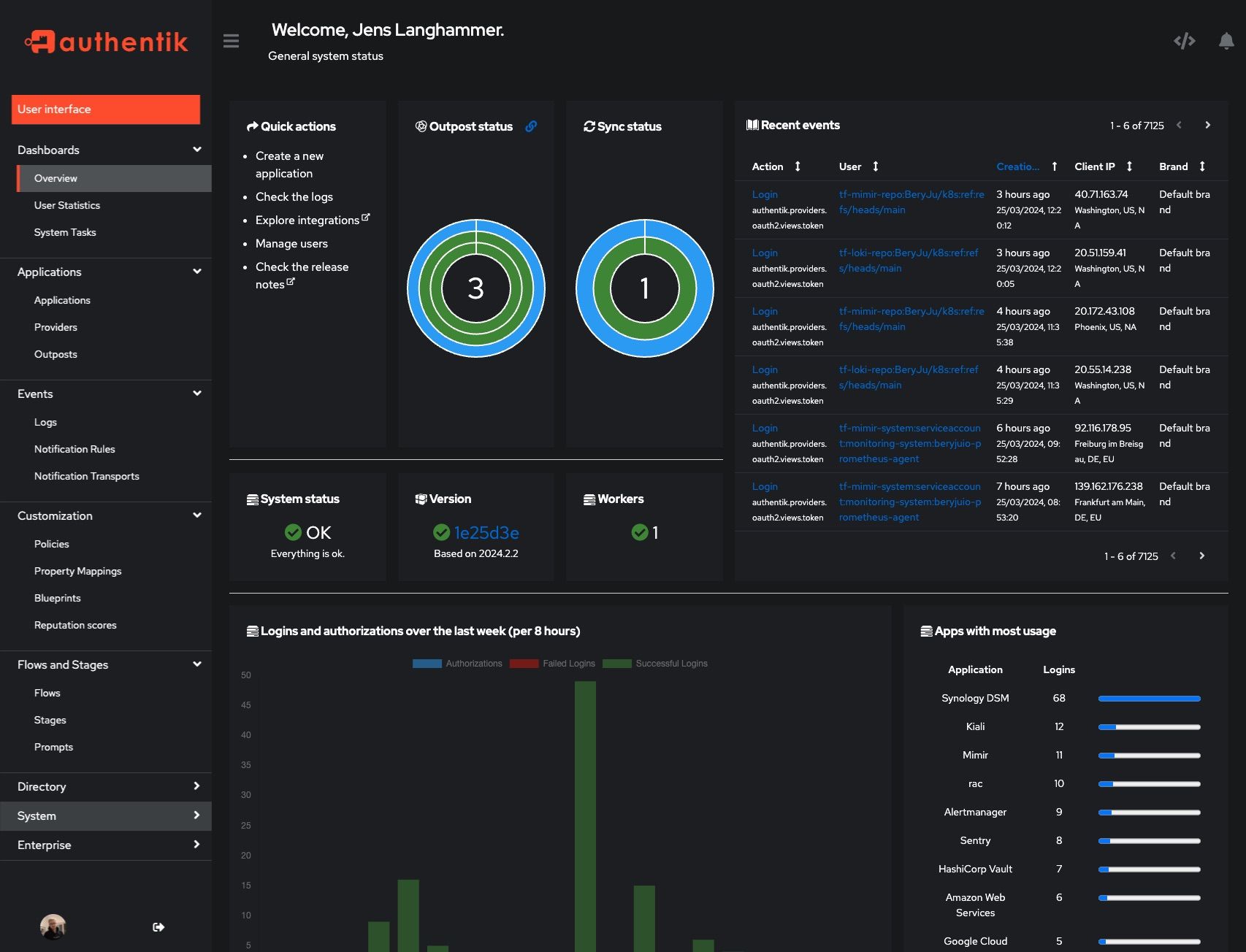

## Screenshots

|

||||

|

||||

| Light | Dark |

|

||||

| ----------------------------------------------------------- | ---------------------------------------------------------- |

|

||||

|  |  |

|

||||

|  |  |

|

||||

| Light | Dark |

|

||||

| ------------------------------------------------------ | ----------------------------------------------------- |

|

||||

|  |  |

|

||||

|  |  |

|

||||

|

||||

## Development

|

||||

|

||||

|

||||

20

SECURITY.md

@ -18,10 +18,10 @@ Even if the issue is not a CVE, we still greatly appreciate your help in hardeni

(.x being the latest patch release for each version)

| Version | Supported |
| --------- | --------- |
| 2023.10.x | ✅ |
| 2024.2.x | ✅ |
| Version | Supported |
| --- | --- |
| 2023.6.x | ✅ |
| 2023.8.x | ✅ |

## Reporting a Vulnerability

@ -31,12 +31,12 @@ To report a vulnerability, send an email to [security@goauthentik.io](mailto:se

authentik reserves the right to reclassify CVSS as necessary. To determine severity, we will use the CVSS calculator from NVD (https://nvd.nist.gov/vuln-metrics/cvss/v3-calculator). The calculated CVSS score will then be translated into one of the following categories:

| Score | Severity |
| ---------- | -------- |
| 0.0 | None |
| 0.1 – 3.9 | Low |
| 4.0 – 6.9 | Medium |
| 7.0 – 8.9 | High |
| Score | Severity |
| --- | --- |
| 0.0 | None |
| 0.1 – 3.9 | Low |
| 4.0 – 6.9 | Medium |
| 7.0 – 8.9 | High |
| 9.0 – 10.0 | Critical |

## Disclosure process
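The severity table maps a CVSS v3 base score (0.0 to 10.0, one decimal place) onto a category. The sketch below implements that lookup. `cvss_severity` is a hypothetical name, and scores are assumed to be already rounded to one decimal, as the NVD calculator reports them.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3 base score to the category names in the severity table."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 – 3.9
    if score < 7.0:
        return "Medium"    # 4.0 – 6.9
    if score < 9.0:
        return "High"      # 7.0 – 8.9
    return "Critical"      # 9.0 – 10.0
```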

@ -2,7 +2,7 @@

from os import environ

__version__ = "2024.6.0"
__version__ = "2024.2.2"
ENV_GIT_HASH_KEY = "GIT_BUILD_HASH"

@ -2,21 +2,18 @@

import platform
from datetime import datetime
from ssl import OPENSSL_VERSION
from sys import version as python_version
from typing import TypedDict

from cryptography.hazmat.backends.openssl.backend import backend
from django.utils.timezone import now
from drf_spectacular.utils import extend_schema
from gunicorn import version_info as gunicorn_version
from rest_framework.fields import SerializerMethodField
from rest_framework.request import Request
from rest_framework.response import Response
from rest_framework.views import APIView

from authentik import get_full_version
from authentik.core.api.utils import PassiveSerializer
from authentik.enterprise.license import LicenseKey
from authentik.lib.config import CONFIG
from authentik.lib.utils.reflection import get_env
from authentik.outposts.apps import MANAGED_OUTPOST

@ -28,13 +25,11 @@ class RuntimeDict(TypedDict):
"""Runtime information"""

python_version: str
gunicorn_version: str
environment: str
architecture: str
platform: str
uname: str
openssl_version: str
openssl_fips_enabled: bool | None
authentik_version: str


class SystemInfoSerializer(PassiveSerializer):

@ -69,15 +64,11 @@ class SystemInfoSerializer(PassiveSerializer):
def get_runtime(self, request: Request) -> RuntimeDict:
"""Get versions"""
return {
"architecture": platform.machine(),
"authentik_version": get_full_version(),
"environment": get_env(),
"openssl_fips_enabled": (
backend._fips_enabled if LicenseKey.get_total().is_valid() else None
),
"openssl_version": OPENSSL_VERSION,
"platform": platform.platform(),
"python_version": python_version,
"gunicorn_version": ".".join(str(x) for x in gunicorn_version),
"environment": get_env(),
"architecture": platform.machine(),
"platform": platform.platform(),
"uname": " ".join(platform.uname()),
}
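`get_runtime` assembles its payload mostly from standard-library calls, plus gunicorn's `version_info` tuple. The sketch below builds the same shape using only the standard library. It leaves out the authentik-specific fields (`authentik_version`, `environment`, `openssl_fips_enabled`). The `gunicorn_version_info` argument stands in for the imported gunicorn tuple, and the `(21, 2, 0)` value used with it is made up.

```python
from __future__ import annotations

import platform
import ssl
import sys
from typing import Sequence


def runtime_info(gunicorn_version_info: Sequence[int]) -> dict[str, str]:
    """Stdlib-only subset of the runtime payload returned by get_runtime."""
    return {
        "architecture": platform.machine(),
        "platform": platform.platform(),
        "python_version": sys.version,
        "openssl_version": ssl.OPENSSL_VERSION,
        # version_info is a tuple of ints, e.g. (21, 2, 0) -> "21.2.0"
        "gunicorn_version": ".".join(str(x) for x in gunicorn_version_info),
        # uname() returns a tuple of strings, so it can be joined directly
        "uname": " ".join(platform.uname()),
    }
```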

@@ -10,7 +10,7 @@ from rest_framework.response import Response
from rest_framework.views import APIView

from authentik import __version__, get_build_hash
from authentik.admin.tasks import VERSION_CACHE_KEY, VERSION_NULL, update_latest_version
from authentik.admin.tasks import VERSION_CACHE_KEY, update_latest_version
from authentik.core.api.utils import PassiveSerializer

@@ -19,7 +19,6 @@ class VersionSerializer(PassiveSerializer):

version_current = SerializerMethodField()
version_latest = SerializerMethodField()
version_latest_valid = SerializerMethodField()
build_hash = SerializerMethodField()
outdated = SerializerMethodField()

@@ -39,10 +38,6 @@ class VersionSerializer(PassiveSerializer):
return __version__
return version_in_cache

def get_version_latest_valid(self, _) -> bool:
"""Check if latest version is valid"""
return cache.get(VERSION_CACHE_KEY) != VERSION_NULL

def get_outdated(self, instance) -> bool:
"""Check if we're running the latest version"""
return parse(self.get_version_current(instance)) < parse(self.get_version_latest(instance))
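
The version hunks use `VERSION_NULL` ("0.0.0") as a sentinel: `update_latest_version` stores it when the update check is disabled or the request fails, and `get_version_latest_valid` reports the cached value as valid only when it differs from the sentinel. The sketch below illustrates that logic under stated assumptions: a plain `dict` stands in for Django's cache, and the hypothetical helper `parse_version` (plain dotted integers only, so no `-rc1` suffixes) stands in for the `parse` function the hunk calls.

```python
# Minimal sketch. Assumptions: a dict replaces Django's cache, and the
# hypothetical parse_version() replaces the real version parser.
VERSION_NULL = "0.0.0"
VERSION_CACHE_KEY = "authentik_latest_version"


def parse_version(value: str) -> tuple[int, ...]:
    """Hypothetical stand-in: turn "2024.2.2" into (2024, 2, 2)."""
    return tuple(int(part) for part in value.split("."))


def version_latest_valid(cache: dict) -> bool:
    """A cached sentinel means the update check was disabled or failed."""
    return cache.get(VERSION_CACHE_KEY) != VERSION_NULL


def outdated(current: str, cache: dict) -> bool:
    """Compare the running version against the cached latest version."""
    latest = cache.get(VERSION_CACHE_KEY, VERSION_NULL)
    return parse_version(current) < parse_version(latest)
```

Because "0.0.0" sorts below any real release, `outdated` in this sketch returns `False` whenever no valid latest version is known, so a failed check never reports an update.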

@@ -18,7 +18,6 @@ from authentik.lib.utils.http import get_http_session
from authentik.root.celery import CELERY_APP

LOGGER = get_logger()
VERSION_NULL = "0.0.0"
VERSION_CACHE_KEY = "authentik_latest_version"
VERSION_CACHE_TIMEOUT = 8 * 60 * 60 # 8 hours
# Chop of the first ^ because we want to search the entire string

@@ -56,7 +55,7 @@ def clear_update_notifications():
def update_latest_version(self: SystemTask):
"""Update latest version info"""
if CONFIG.get_bool("disable_update_check"):
cache.set(VERSION_CACHE_KEY, VERSION_NULL, VERSION_CACHE_TIMEOUT)
cache.set(VERSION_CACHE_KEY, "0.0.0", VERSION_CACHE_TIMEOUT)
self.set_status(TaskStatus.WARNING, "Version check disabled.")
return
try:

@@ -83,7 +82,7 @@ def update_latest_version(self: SystemTask):
event_dict["message"] = f"Changelog: {match.group()}"
Event.new(EventAction.UPDATE_AVAILABLE, **event_dict).save()
except (RequestException, IndexError) as exc:
cache.set(VERSION_CACHE_KEY, VERSION_NULL, VERSION_CACHE_TIMEOUT)
cache.set(VERSION_CACHE_KEY, "0.0.0", VERSION_CACHE_TIMEOUT)
self.set_error(exc)

@@ -10,3 +10,26 @@ class AuthentikAPIConfig(AppConfig):
label = "authentik_api"
mountpoint = "api/"
verbose_name = "authentik API"

def ready(self) -> None:
from drf_spectacular.extensions import OpenApiAuthenticationExtension

from authentik.api.authentication import TokenAuthentication

# Class is defined here as it needs to be created early enough that drf-spectacular will
# find it, but also won't cause any import issues

class TokenSchema(OpenApiAuthenticationExtension):
"""Auth schema"""

target_class = TokenAuthentication
name = "authentik"

def get_security_definition(self, auto_schema):
"""Auth schema"""
return {
"type": "apiKey",
"in": "header",
"name": "Authorization",
"scheme": "bearer",
}

@@ -4,7 +4,6 @@ from hmac import compare_digest
from typing import Any

from django.conf import settings
from drf_spectacular.extensions import OpenApiAuthenticationExtension
from rest_framework.authentication import BaseAuthentication, get_authorization_header
from rest_framework.exceptions import AuthenticationFailed
from rest_framework.request import Request

@@ -103,14 +102,3 @@ class TokenAuthentication(BaseAuthentication):
return None

return (user, None) # pragma: no cover


class TokenSchema(OpenApiAuthenticationExtension):
"""Auth schema"""

target_class = TokenAuthentication
name = "authentik"

def get_security_definition(self, auto_schema):
"""Auth schema"""
return {"type": "http", "scheme": "bearer"}

@@ -12,7 +12,6 @@ from drf_spectacular.settings import spectacular_settings
from drf_spectacular.types import OpenApiTypes
from rest_framework.settings import api_settings

from authentik.api.apps import AuthentikAPIConfig
from authentik.api.pagination import PAGINATION_COMPONENT_NAME, PAGINATION_SCHEMA

@@ -102,12 +101,3 @@ def postprocess_schema_responses(result, generator: SchemaGenerator, **kwargs):
comp = result["components"]["schemas"][component]
comp["additionalProperties"] = {}
return result


def preprocess_schema_exclude_non_api(endpoints, **kwargs):
"""Filter out all API Views which are not mounted under /api"""
return [
(path, path_regex, method, callback)
for path, path_regex, method, callback in endpoints
if path.startswith("/" + AuthentikAPIConfig.mountpoint)
]
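
`preprocess_schema_exclude_non_api` keeps only endpoints whose path starts with `"/" + AuthentikAPIConfig.mountpoint`, that is `/api/`. A standalone sketch of the same filter, assuming endpoints are `(path, path_regex, method, callback)` tuples as unpacked in the hunk; the module-level `MOUNTPOINT` constant is a hypothetical stand-in for the app config attribute.

```python
# Sketch of the schema preprocessing filter. MOUNTPOINT is a hypothetical
# stand-in for AuthentikAPIConfig.mountpoint ("api/").
MOUNTPOINT = "api/"


def exclude_non_api(endpoints, mountpoint: str = MOUNTPOINT):
    """Return only the endpoint tuples mounted under /<mountpoint>."""
    prefix = "/" + mountpoint
    return [
        (path, path_regex, method, callback)
        for path, path_regex, method, callback in endpoints
        if path.startswith(prefix)
    ]
```

Building the prefix from the mountpoint rather than hard-coding `"/api/"` keeps the filter in step if the mountpoint ever changes.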

@@ -68,11 +68,7 @@ class ConfigView(APIView):
"""Get all capabilities this server instance supports"""
caps = []
deb_test = settings.DEBUG or settings.TEST
if (
CONFIG.get("storage.media.backend", "file") == "s3"
or Path(settings.STORAGES["default"]["OPTIONS"]["location"]).is_mount()
or deb_test
):
if Path(settings.MEDIA_ROOT).is_mount() or deb_test:
caps.append(Capabilities.CAN_SAVE_MEDIA)
for processor in get_context_processors():
if cap := processor.capability():

@@ -8,8 +8,6 @@ from django.apps import AppConfig
from django.db import DatabaseError, InternalError, ProgrammingError
from structlog.stdlib import BoundLogger, get_logger

from authentik.root.signals import startup


class ManagedAppConfig(AppConfig):
"""Basic reconciliation logic for apps"""

@@ -25,12 +23,9 @@ class ManagedAppConfig(AppConfig):

def ready(self) -> None:
self.import_related()
startup.connect(self._on_startup_callback, dispatch_uid=self.label)
return super().ready()

def _on_startup_callback(self, sender, **_):
self._reconcile_global()
self._reconcile_tenant()
return super().ready()

def import_related(self):
"""Automatically import related modules which rely on just being imported

@@ -4,14 +4,12 @@ from json import dumps
from typing import Any

from django.core.management.base import BaseCommand, no_translations
from django.db.models import Model, fields
from drf_jsonschema_serializer.convert import converter, field_to_converter
from django.db.models import Model
from drf_jsonschema_serializer.convert import field_to_converter
from rest_framework.fields import Field, JSONField, UUIDField
from rest_framework.relations import PrimaryKeyRelatedField
from rest_framework.serializers import Serializer
from structlog.stdlib import get_logger

from authentik import __version__
from authentik.blueprints.v1.common import BlueprintEntryDesiredState
from authentik.blueprints.v1.importer import SERIALIZER_CONTEXT_BLUEPRINT, is_model_allowed
from authentik.blueprints.v1.meta.registry import BaseMetaModel, registry

@@ -20,23 +18,6 @@ from authentik.lib.models import SerializerModel
LOGGER = get_logger()


@converter
class PrimaryKeyRelatedFieldConverter:
"""Custom primary key field converter which is aware of non-integer based PKs

This is not an exhaustive fix for other non-int PKs, however in authentik we either
use UUIDs or ints"""

field_class = PrimaryKeyRelatedField

def convert(self, field: PrimaryKeyRelatedField):
model: Model = field.queryset.model
pk_field = model._meta.pk
if isinstance(pk_field, fields.UUIDField):
return {"type": "string", "format": "uuid"}
return {"type": "integer"}


class Command(BaseCommand):
"""Generate JSON Schema for blueprints"""

@@ -48,7 +29,7 @@ class Command(BaseCommand):
"$schema": "http://json-schema.org/draft-07/schema",
"$id": "https://goauthentik.io/blueprints/schema.json",
"type": "object",
"title": f"authentik {__version__} Blueprint schema",
"title": "authentik Blueprint schema",
"required": ["version", "entries"],
"properties": {
"version": {

@@ -39,7 +39,7 @@ def reconcile_app(app_name: str):
def wrapper(*args, **kwargs):
config = apps.get_app_config(app_name)
if isinstance(config, ManagedAppConfig):
config._on_startup_callback(None)
config.ready()
return func(*args, **kwargs)

return wrapper
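
`reconcile_app(app_name)` is a decorator factory: the returned `wrapper` looks up the named app config and, if it is a managed app, runs its reconciliation before calling the wrapped function. A Django-free sketch of that shape, assuming hypothetical `FakeAppConfig` and `REGISTRY` stand-ins for `ManagedAppConfig` and `apps.get_app_config`.

```python
from functools import wraps
from typing import Any, Callable


class FakeAppConfig:
    """Hypothetical stand-in for ManagedAppConfig."""

    def __init__(self) -> None:
        self.reconcile_runs = 0

    def _on_startup_callback(self, sender: Any, **_: Any) -> None:
        # The hunk's version calls _reconcile_global() and _reconcile_tenant() here.
        self.reconcile_runs += 1


# Hypothetical stand-in for the app registry behind apps.get_app_config().
REGISTRY: dict[str, Any] = {"authentik_core": FakeAppConfig()}


def reconcile_app(app_name: str) -> Callable:
    """Decorator factory: reconcile app_name before running the wrapped function."""

    def decorator(func: Callable) -> Callable:
        @wraps(func)  # keep the wrapped function's name and docstring
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            config = REGISTRY[app_name]
            if isinstance(config, FakeAppConfig):
                config._on_startup_callback(None)
            return func(*args, **kwargs)

        return wrapper

    return decorator


@reconcile_app("authentik_core")
def run_check() -> str:
    return "done"
```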

@@ -75,7 +75,7 @@ class BlueprintEntry:
_state: BlueprintEntryState = field(default_factory=BlueprintEntryState)

def __post_init__(self, *args, **kwargs) -> None:
self.__tag_contexts: list[YAMLTagContext] = []
self.__tag_contexts: list["YAMLTagContext"] = []

@staticmethod
def from_model(model: SerializerModel, *extra_identifier_names: str) -> "BlueprintEntry":

@@ -556,11 +556,7 @@ class BlueprintDumper(SafeDumper):

def factory(items):
final_dict = dict(items)
# Remove internal state variables
final_dict.pop("_state", None)
# Future-proof to only remove the ID if we don't set a value
if "id" in final_dict and final_dict.get("id") is None:
final_dict.pop("id")
return final_dict

data = asdict(data, dict_factory=factory)
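
The `factory` passed to `dataclasses.asdict` receives the list of `(field_name, value)` pairs for a dataclass instance and returns the mapping used for the dump, which is how `_state` and an unset `id` are dropped before serialization. A self-contained sketch, with a hypothetical `Entry` dataclass standing in for `BlueprintEntry`.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class Entry:
    """Hypothetical stand-in for BlueprintEntry."""

    model: str
    id: Optional[str] = None
    attrs: dict[str, Any] = field(default_factory=dict)
    _state: Any = None  # internal bookkeeping that should not be dumped


def factory(items):
    final_dict = dict(items)
    # Remove internal state variables
    final_dict.pop("_state", None)
    # Only drop the ID when no value was set
    if "id" in final_dict and final_dict.get("id") is None:
        final_dict.pop("id")
    return final_dict
```

`asdict` applies `dict_factory` to every nested dataclass as well, while plain `dict` values such as `attrs` are copied without passing through it.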

@@ -19,6 +19,8 @@ from guardian.models import UserObjectPermission
from rest_framework.exceptions import ValidationError
from rest_framework.serializers import BaseSerializer, Serializer
from structlog.stdlib import BoundLogger, get_logger
from structlog.testing import capture_logs
from structlog.types import EventDict
from yaml import load

from authentik.blueprints.v1.common import (

@@ -39,16 +41,7 @@ from authentik.core.models import (
)
from authentik.enterprise.license import LicenseKey
from authentik.enterprise.models import LicenseUsage
from authentik.enterprise.providers.google_workspace.models import (
GoogleWorkspaceProviderGroup,
GoogleWorkspaceProviderUser,
)
from authentik.enterprise.providers.microsoft_entra.models import (
MicrosoftEntraProviderGroup,
MicrosoftEntraProviderUser,
)
from authentik.enterprise.providers.rac.models import ConnectionToken
from authentik.events.logs import LogEvent, capture_logs
from authentik.events.models import SystemTask
from authentik.events.utils import cleanse_dict
from authentik.flows.models import FlowToken, Stage

@@ -58,9 +51,7 @@ from authentik.outposts.models import OutpostServiceConnection
from authentik.policies.models import Policy, PolicyBindingModel
from authentik.policies.reputation.models import Reputation
from authentik.providers.oauth2.models import AccessToken, AuthorizationCode, RefreshToken
from authentik.providers.scim.models import SCIMProviderGroup, SCIMProviderUser
from authentik.sources.scim.models import SCIMSourceGroup, SCIMSourceUser
from authentik.stages.authenticator_webauthn.models import WebAuthnDeviceType
from authentik.providers.scim.models import SCIMGroup, SCIMUser
from authentik.tenants.models import Tenant

# Context set when the serializer is created in a blueprint context

@@ -94,11 +85,10 @@ def excluded_models() -> list[type[Model]]:
# Classes that have other dependencies
AuthenticatedSession,
# Classes which are only internally managed
# FIXME: these shouldn't need to be explicitly listed, but rather based off of a mixin
FlowToken,
LicenseUsage,
SCIMProviderGroup,
SCIMProviderUser,
SCIMGroup,
SCIMUser,
Tenant,
SystemTask,
ConnectionToken,

@@ -106,13 +96,6 @@ def excluded_models() -> list[type[Model]]:
AccessToken,
RefreshToken,
Reputation,
WebAuthnDeviceType,
SCIMSourceUser,
SCIMSourceGroup,
GoogleWorkspaceProviderUser,
GoogleWorkspaceProviderGroup,
MicrosoftEntraProviderUser,
MicrosoftEntraProviderGroup,
)

@@ -178,7 +161,7 @@ class Importer:

def updater(value) -> Any:
if value in self.__pk_map:
self.logger.debug("Updating reference in entry", value=value)
self.logger.debug("updating reference in entry", value=value)
return self.__pk_map[value]
return value

@@ -267,7 +250,7 @@ class Importer:
model_instance = existing_models.first()
if not isinstance(model(), BaseMetaModel) and model_instance:
self.logger.debug(
"Initialise serializer with instance",
"initialise serializer with instance",
model=model,
instance=model_instance,
pk=model_instance.pk,

@@ -277,14 +260,14 @@ class Importer:
elif model_instance and entry.state == BlueprintEntryDesiredState.MUST_CREATED:
raise EntryInvalidError.from_entry(
(
f"State is set to {BlueprintEntryDesiredState.MUST_CREATED} "
f"state is set to {BlueprintEntryDesiredState.MUST_CREATED} "
"and object exists already",
),
entry,
)
else:
self.logger.debug(
"Initialised new serializer instance",
"initialised new serializer instance",
model=model,
**cleanse_dict(updated_identifiers),
)

@@ -341,7 +324,7 @@ class Importer:
model: type[SerializerModel] = registry.get_model(model_app_label, model_name)
except LookupError:
self.logger.warning(
"App or Model does not exist", app=model_app_label, model=model_name
"app or model does not exist", app=model_app_label, model=model_name
)
return False
# Validate each single entry

@@ -353,7 +336,7 @@ class Importer:
if entry.get_state(self._import) == BlueprintEntryDesiredState.ABSENT:
serializer = exc.serializer
else:
self.logger.warning(f"Entry invalid: {exc}", entry=entry, error=exc)
self.logger.warning(f"entry invalid: {exc}", entry=entry, error=exc)
if raise_errors:
raise exc
return False

@@ -373,14 +356,14 @@ class Importer:
and state == BlueprintEntryDesiredState.CREATED
):
self.logger.debug(
"Instance exists, skipping",
"instance exists, skipping",
model=model,
instance=instance,
pk=instance.pk,
)
else:
instance = serializer.save()
self.logger.debug("Updated model", model=instance)
self.logger.debug("updated model", model=instance)
if "pk" in entry.identifiers:
self.__pk_map[entry.identifiers["pk"]] = instance.pk
entry._state = BlueprintEntryState(instance)

@@ -388,12 +371,12 @@ class Importer:
instance: Model | None = serializer.instance
if instance.pk:
instance.delete()
self.logger.debug("Deleted model", mode=instance)
self.logger.debug("deleted model", mode=instance)
continue
self.logger.debug("Entry to delete with no instance, skipping")
self.logger.debug("entry to delete with no instance, skipping")
return True

def validate(self, raise_validation_errors=False) -> tuple[bool, list[LogEvent]]:
def validate(self, raise_validation_errors=False) -> tuple[bool, list[EventDict]]:
"""Validate loaded blueprint export, ensure all models are allowed
and serializers have no errors"""
self.logger.debug("Starting blueprint import validation")

@@ -407,7 +390,9 @@ class Importer:
):
successful = self._apply_models(raise_errors=raise_validation_errors)
if not successful:
self.logger.warning("Blueprint validation failed")
self.logger.debug("Blueprint validation failed")
for log in logs:
getattr(self.logger, log.get("log_level"))(**log)
self.logger.debug("Finished blueprint import validation")
self._import = orig_import
return successful, logs

@@ -30,7 +30,6 @@ from authentik.blueprints.v1.common import BlueprintLoader, BlueprintMetadata, E
from authentik.blueprints.v1.importer import Importer
from authentik.blueprints.v1.labels import LABEL_AUTHENTIK_INSTANTIATE
from authentik.blueprints.v1.oci import OCI_PREFIX
from authentik.events.logs import capture_logs
from authentik.events.models import TaskStatus
from authentik.events.system_tasks import SystemTask, prefill_task
from authentik.events.utils import sanitize_dict

@@ -212,15 +211,14 @@ def apply_blueprint(self: SystemTask, instance_pk: str):
if not valid:
instance.status = BlueprintInstanceStatus.ERROR
instance.save()
self.set_status(TaskStatus.ERROR, *logs)
self.set_status(TaskStatus.ERROR, *[x["event"] for x in logs])
return
applied = importer.apply()
if not applied:
instance.status = BlueprintInstanceStatus.ERROR
instance.save()
self.set_status(TaskStatus.ERROR, "Failed to apply")
return
with capture_logs() as logs:
applied = importer.apply()
if not applied:
instance.status = BlueprintInstanceStatus.ERROR
instance.save()
self.set_status(TaskStatus.ERROR, *logs)
return
instance.status = BlueprintInstanceStatus.SUCCESSFUL
instance.last_applied_hash = file_hash
instance.last_applied = now()

@@ -1,21 +0,0 @@
# Generated by Django 5.0.4 on 2024-04-18 18:56

from django.db import migrations, models


class Migration(migrations.Migration):

dependencies = [
("authentik_brands", "0005_tenantuuid_to_branduuid"),
]

operations = [
migrations.AddIndex(
model_name="brand",
index=models.Index(fields=["domain"], name="authentik_b_domain_b9b24a_idx"),
),
migrations.AddIndex(
model_name="brand",
index=models.Index(fields=["default"], name="authentik_b_default_3ccf12_idx"),
),
]

@@ -84,7 +84,3 @@ class Brand(SerializerModel):
class Meta:
verbose_name = _("Brand")
verbose_name_plural = _("Brands")
indexes = [
models.Index(fields=["domain"]),
models.Index(fields=["default"]),
]

@@ -20,15 +20,15 @@ from rest_framework.response import Response
from rest_framework.serializers import ModelSerializer
from rest_framework.viewsets import ModelViewSet
from structlog.stdlib import get_logger
from structlog.testing import capture_logs

from authentik.admin.api.metrics import CoordinateSerializer
from authentik.api.pagination import Pagination
from authentik.blueprints.v1.importer import SERIALIZER_CONTEXT_BLUEPRINT
from authentik.core.api.providers import ProviderSerializer
from authentik.core.api.used_by import UsedByMixin
from authentik.core.models import Application, User
from authentik.events.logs import LogEventSerializer, capture_logs
from authentik.events.models import EventAction
from authentik.events.utils import sanitize_dict
from authentik.lib.utils.file import (
FilePathSerializer,
FileUploadSerializer,

@@ -37,19 +37,16 @@ from authentik.lib.utils.file import (
)
from authentik.policies.api.exec import PolicyTestResultSerializer
from authentik.policies.engine import PolicyEngine
from authentik.policies.types import CACHE_PREFIX, PolicyResult
from authentik.policies.types import PolicyResult
from authentik.rbac.decorators import permission_required
from authentik.rbac.filters import ObjectFilter

LOGGER = get_logger()


def user_app_cache_key(user_pk: str, page_number: int | None = None) -> str:
def user_app_cache_key(user_pk: str) -> str:
"""Cache key where application list for user is saved"""
key = f"{CACHE_PREFIX}/app_access/{user_pk}"
if page_number:
key += f"/{page_number}"
return key
return f"goauthentik.io/core/app_access/{user_pk}"


class ApplicationSerializer(ModelSerializer):
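
In the paginated variant of `user_app_cache_key`, the page number is appended to the key so each page of a user's allowed-application list is cached under its own entry, while a missing (or zero) page number falls back to the per-user key. A standalone sketch; the `CACHE_PREFIX` value here is a hypothetical placeholder, since the hunk only imports the name from `authentik.policies.types`.

```python
from typing import Optional

# Hypothetical placeholder; the real value lives in authentik.policies.types.
CACHE_PREFIX = "goauthentik.io/policies"


def user_app_cache_key(user_pk: str, page_number: Optional[int] = None) -> str:
    """Cache key for a user's allowed applications, optionally per page."""
    key = f"{CACHE_PREFIX}/app_access/{user_pk}"
    if page_number:  # None and 0 both fall back to the per-user key
        key += f"/{page_number}"
    return key
```

One consequence of this scheme is that invalidating a user's cached list means clearing every per-page key under the shared per-user prefix, not a single entry.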

@@ -185,9 +182,9 @@ class ApplicationViewSet(UsedByMixin, ModelViewSet):
if request.user.is_superuser:
log_messages = []
for log in logs:
if log.attributes.get("process", "") == "PolicyProcess":
if log.get("process", "") == "PolicyProcess":
continue
log_messages.append(LogEventSerializer(log).data)
log_messages.append(sanitize_dict(log))
result.log_messages = log_messages
response = PolicyTestResultSerializer(result)
return Response(response.data)

@@ -217,8 +214,7 @@ class ApplicationViewSet(UsedByMixin, ModelViewSet):
return super().list(request)

queryset = self._filter_queryset_for_list(self.get_queryset())
paginator: Pagination = self.paginator
paginated_apps = paginator.paginate_queryset(queryset, request)
pagined_apps = self.paginate_queryset(queryset)

if "for_user" in request.query_params:
try:

@@ -232,22 +228,20 @@ class ApplicationViewSet(UsedByMixin, ModelViewSet):
raise ValidationError({"for_user": "User not found"})
except ValueError as exc:
raise ValidationError from exc
allowed_applications = self._get_allowed_applications(paginated_apps, user=for_user)
allowed_applications = self._get_allowed_applications(pagined_apps, user=for_user)
serializer = self.get_serializer(allowed_applications, many=True)
return self.get_paginated_response(serializer.data)

allowed_applications = []
if not should_cache:
allowed_applications = self._get_allowed_applications(paginated_apps)
allowed_applications = self._get_allowed_applications(pagined_apps)
if should_cache:
allowed_applications = cache.get(
user_app_cache_key(self.request.user.pk, paginator.page.number)
)
allowed_applications = cache.get(user_app_cache_key(self.request.user.pk))
if not allowed_applications:
LOGGER.debug("Caching allowed application list", page=paginator.page.number)
allowed_applications = self._get_allowed_applications(paginated_apps)
LOGGER.debug("Caching allowed application list")
allowed_applications = self._get_allowed_applications(pagined_apps)
cache.set(
user_app_cache_key(self.request.user.pk, paginator.page.number),
user_app_cache_key(self.request.user.pk),
allowed_applications,
timeout=86400,
)

@@ -2,23 +2,16 @@

from json import loads

from django.db.models import Prefetch
from django.http import Http404
from django_filters.filters import CharFilter, ModelMultipleChoiceFilter
from django_filters.filterset import FilterSet
from drf_spectacular.utils import (
OpenApiParameter,
OpenApiResponse,
extend_schema,
extend_schema_field,
)
from drf_spectacular.utils import OpenApiResponse, extend_schema
from guardian.shortcuts import get_objects_for_user
from rest_framework.decorators import action