Compare commits

24 Commits

web/add-ht

...

version/20

| Author | SHA1 | Date | |
|---|---|---|---|
| | 8256f1897d | | |
| | 16d321835d | | |
| | f34612efe6 | | |
| | e82f147130 | | |
| | 0ea6ad8eea | | |
| | f731443220 | | |
| | b70a66cde5 | | |
| | b733dbbcb0 | | |
| | e34d4c0669 | | |
| | 310983a4d0 | | |
| | 47b0fc86f7 | | |
| | b6e961b1f3 | | |
| | 874d7ff320 | | |
| | e4a5bc9df6 | | |
| | 318e0cf9f8 | | |
| | bd0815d894 | | |
| | af35ecfe66 | | |
| | 0c05cd64bb | | |
| | cb80b76490 | | |
| | 061d4bc758 | | |
| | 8ff27f69e1 | | |
| | 045cd98276 | | |
| | b520843984 | | |
| | 92216e4ea8 | | |

@ -1,5 +1,5 @@

|

||||

[bumpversion]

|

||||

current_version = 2024.6.1

|

||||

current_version = 2024.2.1

|

||||

tag = True

|

||||

commit = True

|

||||

parse = (?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)(?:-(?P<rc_t>[a-zA-Z-]+)(?P<rc_n>[1-9]\\d*))?

|

||||

@ -17,14 +17,10 @@ optional_value = final

|

||||

|

||||

[bumpversion:file:pyproject.toml]

|

||||

|

||||

[bumpversion:file:package.json]

|

||||

|

||||

[bumpversion:file:docker-compose.yml]

|

||||

|

||||

[bumpversion:file:schema.yml]

|

||||

|

||||

[bumpversion:file:blueprints/schema.json]

|

||||

|

||||

[bumpversion:file:authentik/__init__.py]

|

||||

|

||||

[bumpversion:file:internal/constants/constants.go]

|

||||

|

||||
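As a rough illustration of what the `parse` line in the hunk above does, the sketch below applies the same pattern to the two `current_version` values shown. The pattern is copied as it appears in the hunk, including the doubled backslash in the `rc_n` group. The helper name `parse_version` is hypothetical, and this is not bumpversion's own code, only Python's `re` module applied to the same expression. Only plain release versions are exercised.

```python
import re

# Pattern copied verbatim from the `parse =` line of the hunk above.
PARSE = re.compile(
    r"(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)"
    r"(?:-(?P<rc_t>[a-zA-Z-]+)(?P<rc_n>[1-9]\\d*))?"
)


def parse_version(version: str) -> dict:
    """Hypothetical helper: split a version string into its named parts."""
    match = PARSE.match(version)
    if match is None:
        raise ValueError(f"not a recognised version: {version!r}")
    # Groups of the optional release-candidate suffix are None when absent.
    return match.groupdict()


if __name__ == "__main__":
    for value in ("2024.6.1", "2024.2.1"):
        print(value, parse_version(value))
```

For both values in the hunk, the `major`, `minor` and `patch` groups are filled and the two release-candidate groups stay `None`.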

2

.github/FUNDING.yml

vendored

@ -1 +1 @@

|

||||

custom: https://goauthentik.io/pricing/

|

||||

github: [BeryJu]

|

||||

|

||||

2

.github/ISSUE_TEMPLATE/question.md

vendored

@ -9,7 +9,7 @@ assignees: ""

|

||||

**Describe your question/**

|

||||

A clear and concise description of what you're trying to do.

|

||||

|

||||

**Relevant info**

|

||||

**Relevant infos**

|

||||

i.e. Version of other software you're using, specifics of your setup

|

||||

|

||||

**Screenshots**

|

||||

|

||||

@ -54,10 +54,9 @@ runs:

|

||||

authentik:

|

||||

outposts:

|

||||

container_image_base: ghcr.io/goauthentik/dev-%(type)s:gh-%(build_hash)s

|

||||

global:

|

||||

image:

|

||||

repository: ghcr.io/goauthentik/dev-server

|

||||

tag: ${{ inputs.tag }}

|

||||

image:

|

||||

repository: ghcr.io/goauthentik/dev-server

|

||||

tag: ${{ inputs.tag }}

|

||||

```

|

||||

|

||||

For arm64, use these values:

|

||||

@ -66,10 +65,9 @@ runs:

|

||||

authentik:

|

||||

outposts:

|

||||

container_image_base: ghcr.io/goauthentik/dev-%(type)s:gh-%(build_hash)s

|

||||

global:

|

||||

image:

|

||||

repository: ghcr.io/goauthentik/dev-server

|

||||

tag: ${{ inputs.tag }}-arm64

|

||||

image:

|

||||

repository: ghcr.io/goauthentik/dev-server

|

||||

tag: ${{ inputs.tag }}-arm64

|

||||

```

|

||||

|

||||

Afterwards, run the upgrade commands from the latest release notes.

|

||||

|

||||

@ -11,10 +11,6 @@ inputs:

|

||||

description: "Docker image arch"

|

||||

|

||||

outputs:

|

||||

shouldBuild:

|

||||

description: "Whether to build image or not"

|

||||

value: ${{ steps.ev.outputs.shouldBuild }}

|

||||

|

||||

sha:

|

||||

description: "sha"

|

||||

value: ${{ steps.ev.outputs.sha }}

|

||||

|

||||

@ -7,12 +7,10 @@ from time import time

|

||||

parser = configparser.ConfigParser()

|

||||

parser.read(".bumpversion.cfg")

|

||||

|

||||

should_build = str(os.environ.get("DOCKER_USERNAME", None) is not None).lower()

|

||||

|

||||

branch_name = os.environ["GITHUB_REF"]

|

||||

if os.environ.get("GITHUB_HEAD_REF", "") != "":

|

||||

branch_name = os.environ["GITHUB_HEAD_REF"]

|

||||

safe_branch_name = branch_name.replace("refs/heads/", "").replace("/", "-").replace("'", "-")

|

||||

safe_branch_name = branch_name.replace("refs/heads/", "").replace("/", "-")

|

||||

|

||||

image_names = os.getenv("IMAGE_NAME").split(",")

|

||||

image_arch = os.getenv("IMAGE_ARCH") or None

|

||||
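The two expressions in the hunk above that are easiest to misread are the `should_build` flag and the branch-name sanitising chain. The sketch below restates them as functions over a plain dictionary so they can be tried without a CI environment. The function names and the `escape_quotes` switch are assumptions made for illustration; the switch toggles between the two `safe_branch_name` lines that appear in the hunk.

```python
def should_build(env: dict) -> str:
    """Mirror of the `should_build` line: "true" whenever the key is present."""
    return str(env.get("DOCKER_USERNAME", None) is not None).lower()


def safe_branch_name(env: dict, escape_quotes: bool = True) -> str:
    """Mirror of the branch-name lines; `escape_quotes` is a hypothetical switch."""
    branch_name = env["GITHUB_REF"]
    # A non-empty GITHUB_HEAD_REF takes precedence over GITHUB_REF.
    if env.get("GITHUB_HEAD_REF", "") != "":
        branch_name = env["GITHUB_HEAD_REF"]
    name = branch_name.replace("refs/heads/", "").replace("/", "-")
    if escape_quotes:
        # The longer variant in the hunk also replaces single quotes.
        name = name.replace("'", "-")
    return name


if __name__ == "__main__":
    print(should_build({}))                       # key absent
    print(should_build({"DOCKER_USERNAME": ""}))  # key present but empty
    print(safe_branch_name({"GITHUB_REF": "refs/heads/web/add-ht"}))
```

Note that the flag tests for presence, not for a non-empty value: in Python, a variable that is set to an empty string still compares as `is not None`.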

@ -54,9 +52,8 @@ image_main_tag = image_tags[0]

|

||||

image_tags_rendered = ",".join(image_tags)

|

||||

|

||||

with open(os.environ["GITHUB_OUTPUT"], "a+", encoding="utf-8") as _output:

|

||||

print(f"shouldBuild={should_build}", file=_output)

|

||||

print(f"sha={sha}", file=_output)

|

||||

print(f"version={version}", file=_output)

|

||||

print(f"prerelease={prerelease}", file=_output)

|

||||

print(f"imageTags={image_tags_rendered}", file=_output)

|

||||

print(f"imageMainTag={image_main_tag}", file=_output)

|

||||

print("sha=%s" % sha, file=_output)

|

||||

print("version=%s" % version, file=_output)

|

||||

print("prerelease=%s" % prerelease, file=_output)

|

||||

print("imageTags=%s" % image_tags_rendered, file=_output)

|

||||

print("imageMainTag=%s" % image_main_tag, file=_output)

|

||||

|

||||
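The hunk above shows the same `key=value` output lines written once with f-strings and once with `%` formatting. A minimal sketch, assuming a scratch file in place of the real `GITHUB_OUTPUT` path, shows that both styles produce identical lines. The helper names `write_outputs` and `read_outputs` and the sample values are hypothetical.

```python
import os
import tempfile


def write_outputs(path: str, values: dict, use_fstring: bool = True) -> None:
    """Append key=value lines to `path`, in either formatting style."""
    with open(path, "a+", encoding="utf-8") as _output:
        for key, value in values.items():
            if use_fstring:
                print(f"{key}={value}", file=_output)
            else:
                print("%s=%s" % (key, value), file=_output)


def read_outputs(path: str) -> list:
    """Return the written lines without their trailing newlines."""
    with open(path, encoding="utf-8") as handle:
        return [line.rstrip("\n") for line in handle]


if __name__ == "__main__":
    sample = {"sha": "abc123", "version": "2024.6.1", "prerelease": "false"}
    with tempfile.TemporaryDirectory() as tmp:
        first = os.path.join(tmp, "fstring.txt")
        second = os.path.join(tmp, "percent.txt")
        write_outputs(first, sample, use_fstring=True)
        write_outputs(second, sample, use_fstring=False)
        print(read_outputs(first) == read_outputs(second))
```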

8

.github/actions/setup/action.yml

vendored

@ -16,25 +16,25 @@ runs:

|

||||

sudo apt-get update

|

||||

sudo apt-get install --no-install-recommends -y libpq-dev openssl libxmlsec1-dev pkg-config gettext

|

||||

- name: Setup python and restore poetry

|

||||

uses: actions/setup-python@v5

|

||||

uses: actions/setup-python@v4

|

||||

with:

|

||||

python-version-file: "pyproject.toml"

|

||||

cache: "poetry"

|

||||

- name: Setup node

|

||||

uses: actions/setup-node@v4

|

||||

uses: actions/setup-node@v3

|

||||

with:

|

||||

node-version-file: web/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: web/package-lock.json

|

||||

- name: Setup go

|

||||

uses: actions/setup-go@v5

|

||||

uses: actions/setup-go@v4

|

||||

with:

|

||||

go-version-file: "go.mod"

|

||||

- name: Setup dependencies

|

||||

shell: bash

|

||||

run: |

|

||||

export PSQL_TAG=${{ inputs.postgresql_version }}

|

||||

docker compose -f .github/actions/setup/docker-compose.yml up -d

|

||||

docker-compose -f .github/actions/setup/docker-compose.yml up -d

|

||||

poetry install

|

||||

cd web && npm ci

|

||||

- name: Generate config

|

||||

|

||||

2

.github/actions/setup/docker-compose.yml

vendored

@ -1,3 +1,5 @@

|

||||

version: "3.7"

|

||||

|

||||

services:

|

||||

postgresql:

|

||||

image: docker.io/library/postgres:${PSQL_TAG:-16}

|

||||

|

||||
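The image reference in the hunk above uses the `${PSQL_TAG:-16}` form, which falls back to `16` when `PSQL_TAG` is unset or empty. A small sketch of that fallback rule, with a hypothetical helper name and a plain dictionary standing in for the environment:

```python
def resolve_postgres_image(env: dict) -> str:
    """Hypothetical helper: apply the `:-` default rule to PSQL_TAG."""
    # `:-` substitutes the default when the variable is unset OR empty.
    tag = env.get("PSQL_TAG") or "16"
    return f"docker.io/library/postgres:{tag}"


if __name__ == "__main__":
    print(resolve_postgres_image({}))
    print(resolve_postgres_image({"PSQL_TAG": "12-alpine"}))
```

The second call corresponds to the setup action exporting `PSQL_TAG` from its `postgresql_version` input before bringing the compose file up.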

1

.github/codespell-words.txt

vendored

@ -4,4 +4,3 @@ hass

|

||||

warmup

|

||||

ontext

|

||||

singed

|

||||

assertIn

|

||||

|

||||

40

.github/dependabot.yml

vendored

@ -21,10 +21,7 @@ updates:

|

||||

labels:

|

||||

- dependencies

|

||||

- package-ecosystem: npm

|

||||

directories:

|

||||

- "/web"

|

||||

- "/tests/wdio"

|

||||

- "/web/sfe"

|

||||

directory: "/web"

|

||||

schedule:

|

||||

interval: daily

|

||||

time: "04:00"

|

||||

@ -33,6 +30,7 @@ updates:

|

||||

open-pull-requests-limit: 10

|

||||

commit-message:

|

||||

prefix: "web:"

|

||||

# TODO: deduplicate these groups

|

||||

groups:

|

||||

sentry:

|

||||

patterns:

|

||||

@ -54,10 +52,38 @@ updates:

|

||||

esbuild:

|

||||

patterns:

|

||||

- "@esbuild/*"

|

||||

rollup:

|

||||

- package-ecosystem: npm

|

||||

directory: "/tests/wdio"

|

||||

schedule:

|

||||

interval: daily

|

||||

time: "04:00"

|

||||

labels:

|

||||

- dependencies

|

||||

open-pull-requests-limit: 10

|

||||

commit-message:

|

||||

prefix: "web:"

|

||||

# TODO: deduplicate these groups

|

||||

groups:

|

||||

sentry:

|

||||

patterns:

|

||||

- "@rollup/*"

|

||||

- "rollup-*"

|

||||

- "@sentry/*"

|

||||

- "@spotlightjs/*"

|

||||

babel:

|

||||

patterns:

|

||||

- "@babel/*"

|

||||

- "babel-*"

|

||||

eslint:

|

||||

patterns:

|

||||

- "@typescript-eslint/*"

|

||||

- "eslint"

|

||||

- "eslint-*"

|

||||

storybook:

|

||||

patterns:

|

||||

- "@storybook/*"

|

||||

- "*storybook*"

|

||||

esbuild:

|

||||

patterns:

|

||||

- "@esbuild/*"

|

||||

wdio:

|

||||

patterns:

|

||||

- "@wdio/*"

|

||||

|

||||
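The `groups` blocks in the hunk above assign packages to named groups by wildcard pattern. The sketch below models that with Python's `fnmatch`, assuming that a package goes to the first group with a matching pattern. Group names and patterns are copied from lines of the hunk, without reconstructing which `updates` entry each belongs to. The function name `group_for` and the sample package names are illustrative assumptions, and this is not Dependabot's own matcher.

```python
from fnmatch import fnmatchcase

# Group names and patterns as they appear in the hunk above (order matters).
GROUPS = {
    "sentry": ["@sentry/*", "@spotlightjs/*"],
    "babel": ["@babel/*", "babel-*"],
    "eslint": ["@typescript-eslint/*", "eslint", "eslint-*"],
    "storybook": ["@storybook/*", "*storybook*"],
    "esbuild": ["@esbuild/*"],
    "rollup": ["@rollup/*", "rollup-*"],
    "wdio": ["@wdio/*"],
}


def group_for(package: str, groups: dict = GROUPS):
    """Return the first group whose patterns match, or None if none do."""
    for name, patterns in groups.items():
        if any(fnmatchcase(package, pattern) for pattern in patterns):
            return name
    return None


if __name__ == "__main__":
    for package in ("@sentry/browser", "eslint", "eslint-plugin-storybook", "lit"):
        print(package, "->", group_for(package))
```

Under the first-match assumption, a package such as `eslint-plugin-storybook` lands in `eslint` even though it also matches `*storybook*`, which is why the order of the groups matters.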

65

.github/workflows/api-py-publish.yml

vendored

@ -1,65 +0,0 @@

|

||||

name: authentik-api-py-publish

|

||||

on:

|

||||

push:

|

||||

branches: [main]

|

||||

paths:

|

||||

- "schema.yml"

|

||||

workflow_dispatch:

|

||||

jobs:

|

||||

build:

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

id-token: write

|

||||

steps:

|

||||

- id: generate_token

|

||||

uses: tibdex/github-app-token@v2

|

||||

with:

|

||||

app_id: ${{ secrets.GH_APP_ID }}

|

||||

private_key: ${{ secrets.GH_APP_PRIVATE_KEY }}

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

token: ${{ steps.generate_token.outputs.token }}

|

||||

- name: Install poetry & deps

|

||||

shell: bash

|

||||

run: |

|

||||

pipx install poetry || true

|

||||

sudo apt-get update

|

||||

sudo apt-get install --no-install-recommends -y libpq-dev openssl libxmlsec1-dev pkg-config gettext

|

||||

- name: Setup python and restore poetry

|

||||

uses: actions/setup-python@v5

|

||||

with:

|

||||

python-version-file: "pyproject.toml"

|

||||

cache: "poetry"

|

||||

- name: Generate API Client

|

||||

run: make gen-client-py

|

||||

- name: Publish package

|

||||

working-directory: gen-py-api/

|

||||

run: |

|

||||

poetry build

|

||||

- name: Publish package to PyPI

|

||||

uses: pypa/gh-action-pypi-publish@release/v1

|

||||

with:

|

||||

packages-dir: gen-py-api/dist/

|

||||

# We can't easily upgrade the API client being used due to poetry being poetry

|

||||

# so we'll have to rely on dependabot

|

||||

# - name: Upgrade /

|

||||

# run: |

|

||||

# export VERSION=$(cd gen-py-api && poetry version -s)

|

||||

# poetry add "authentik_client=$VERSION" --allow-prereleases --lock

|

||||

# - uses: peter-evans/create-pull-request@v6

|

||||

# id: cpr

|

||||

# with:

|

||||

# token: ${{ steps.generate_token.outputs.token }}

|

||||

# branch: update-root-api-client

|

||||

# commit-message: "root: bump API Client version"

|

||||

# title: "root: bump API Client version"

|

||||

# body: "root: bump API Client version"

|

||||

# delete-branch: true

|

||||

# signoff: true

|

||||

# # ID from https://api.github.com/users/authentik-automation[bot]

|

||||

# author: authentik-automation[bot] <135050075+authentik-automation[bot]@users.noreply.github.com>

|

||||

# - uses: peter-evans/enable-pull-request-automerge@v3

|

||||

# with:

|

||||

# token: ${{ steps.generate_token.outputs.token }}

|

||||

# pull-request-number: ${{ steps.cpr.outputs.pull-request-number }}

|

||||

# merge-method: squash

|

||||

29

.github/workflows/ci-main.yml

vendored

@ -7,6 +7,8 @@ on:

|

||||

- main

|

||||

- next

|

||||

- version-*

|

||||

paths-ignore:

|

||||

- website/**

|

||||

pull_request:

|

||||

branches:

|

||||

- main

|

||||

@ -26,7 +28,10 @@ jobs:

|

||||

- bandit

|

||||

- black

|

||||

- codespell

|

||||

- isort

|

||||

- pending-migrations

|

||||

# - pylint

|

||||

- pyright

|

||||

- ruff

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

@ -50,6 +55,7 @@ jobs:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

psql:

|

||||

- 12-alpine

|

||||

- 15-alpine

|

||||

- 16-alpine

|

||||

steps:

|

||||

@ -103,6 +109,7 @@ jobs:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

psql:

|

||||

- 12-alpine

|

||||

- 15-alpine

|

||||

- 16-alpine

|

||||

steps:

|

||||

@ -128,7 +135,7 @@ jobs:

|

||||

- name: Setup authentik env

|

||||

uses: ./.github/actions/setup

|

||||

- name: Create k8s Kind Cluster

|

||||

uses: helm/kind-action@v1.10.0

|

||||

uses: helm/kind-action@v1.9.0

|

||||

- name: run integration

|

||||

run: |

|

||||

poetry run coverage run manage.py test tests/integration

|

||||

@ -158,8 +165,6 @@ jobs:

|

||||

glob: tests/e2e/test_provider_ldap* tests/e2e/test_source_ldap*

|

||||

- name: radius

|

||||

glob: tests/e2e/test_provider_radius*

|

||||

- name: scim

|

||||

glob: tests/e2e/test_source_scim*

|

||||

- name: flows

|

||||

glob: tests/e2e/test_flows*

|

||||

steps:

|

||||

@ -168,7 +173,7 @@ jobs:

|

||||

uses: ./.github/actions/setup

|

||||

- name: Setup e2e env (chrome, etc)

|

||||

run: |

|

||||

docker compose -f tests/e2e/docker-compose.yml up -d

|

||||

docker-compose -f tests/e2e/docker-compose.yml up -d

|

||||

- id: cache-web

|

||||

uses: actions/cache@v4

|

||||

with:

|

||||

@ -214,24 +219,22 @@ jobs:

|

||||

# Needed to upload contianer images to ghcr.io

|

||||

packages: write

|

||||

timeout-minutes: 120

|

||||

if: "github.repository == 'goauthentik/authentik'"

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

ref: ${{ github.event.pull_request.head.sha }}

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v3.1.0

|

||||

uses: docker/setup-qemu-action@v3.0.0

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v3

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/dev-server

|

||||

image-arch: ${{ matrix.arch }}

|

||||

- name: Login to Container Registry

|

||||

if: ${{ steps.ev.outputs.shouldBuild == 'true' }}

|

||||

uses: docker/login-action@v3

|

||||

with:

|

||||

registry: ghcr.io

|

||||

@ -240,18 +243,18 @@ jobs:

|

||||

- name: generate ts client

|

||||

run: make gen-client-ts

|

||||

- name: Build Docker Image

|

||||

uses: docker/build-push-action@v6

|

||||

uses: docker/build-push-action@v5

|

||||

with:

|

||||

context: .

|

||||

secrets: |

|

||||

GEOIPUPDATE_ACCOUNT_ID=${{ secrets.GEOIPUPDATE_ACCOUNT_ID }}

|

||||

GEOIPUPDATE_LICENSE_KEY=${{ secrets.GEOIPUPDATE_LICENSE_KEY }}

|

||||

tags: ${{ steps.ev.outputs.imageTags }}

|

||||

push: ${{ steps.ev.outputs.shouldBuild == 'true' }}

|

||||

push: true

|

||||

build-args: |

|

||||

GIT_BUILD_HASH=${{ steps.ev.outputs.sha }}

|

||||

cache-from: type=registry,ref=ghcr.io/goauthentik/dev-server:buildcache

|

||||

cache-to: type=registry,ref=ghcr.io/goauthentik/dev-server:buildcache,mode=max

|

||||

cache-from: type=gha

|

||||

cache-to: type=gha,mode=max

|

||||

platforms: linux/${{ matrix.arch }}

|

||||

pr-comment:

|

||||

needs:

|

||||

@ -269,8 +272,6 @@ jobs:

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/dev-server

|

||||

- name: Comment on PR

|

||||

|

||||

16

.github/workflows/ci-outpost.yml

vendored

@ -29,7 +29,7 @@ jobs:

|

||||

- name: Generate API

|

||||

run: make gen-client-go

|

||||

- name: golangci-lint

|

||||

uses: golangci/golangci-lint-action@v6

|

||||

uses: golangci/golangci-lint-action@v4

|

||||

with:

|

||||

version: v1.54.2

|

||||

args: --timeout 5000s --verbose

|

||||

@ -71,23 +71,21 @@ jobs:

|

||||

permissions:

|

||||

# Needed to upload contianer images to ghcr.io

|

||||

packages: write

|

||||

if: "github.repository == 'goauthentik/authentik'"

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

ref: ${{ github.event.pull_request.head.sha }}

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v3.1.0

|

||||

uses: docker/setup-qemu-action@v3.0.0

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v3

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/dev-${{ matrix.type }}

|

||||

- name: Login to Container Registry

|

||||

if: ${{ steps.ev.outputs.shouldBuild == 'true' }}

|

||||

uses: docker/login-action@v3

|

||||

with:

|

||||

registry: ghcr.io

|

||||

@ -96,17 +94,17 @@ jobs:

|

||||

- name: Generate API

|

||||

run: make gen-client-go

|

||||

- name: Build Docker Image

|

||||

uses: docker/build-push-action@v6

|

||||

uses: docker/build-push-action@v5

|

||||

with:

|

||||

tags: ${{ steps.ev.outputs.imageTags }}

|

||||

file: ${{ matrix.type }}.Dockerfile

|

||||

push: ${{ steps.ev.outputs.shouldBuild == 'true' }}

|

||||

push: true

|

||||

build-args: |

|

||||

GIT_BUILD_HASH=${{ steps.ev.outputs.sha }}

|

||||

platforms: linux/amd64,linux/arm64

|

||||

context: .

|

||||

cache-from: type=registry,ref=ghcr.io/goauthentik/dev-${{ matrix.type }}:buildcache

|

||||

cache-to: type=registry,ref=ghcr.io/goauthentik/dev-${{ matrix.type }}:buildcache,mode=max

|

||||

cache-from: type=gha

|

||||

cache-to: type=gha,mode=max

|

||||

build-binary:

|

||||

timeout-minutes: 120

|

||||

needs:

|

||||

|

||||

114

.github/workflows/ci-web.yml

vendored

@ -12,36 +12,14 @@ on:

|

||||

- version-*

|

||||

|

||||

jobs:

|

||||

lint:

|

||||

lint-eslint:

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

command:

|

||||

- lint

|

||||

- lint:lockfile

|

||||

- tsc

|

||||

- prettier-check

|

||||

project:

|

||||

- web

|

||||

- tests/wdio

|

||||

include:

|

||||

- command: tsc

|

||||

project: web

|

||||

extra_setup: |

|

||||

cd sfe/ && npm ci

|

||||

- command: lit-analyse

|

||||

project: web

|

||||

extra_setup: |

|

||||

# lit-analyse doesn't understand path rewrites, so make it

|

||||

# belive it's an actual module

|

||||

cd node_modules/@goauthentik

|

||||

ln -s ../../src/ web

|

||||

exclude:

|

||||

- command: lint:lockfile

|

||||

project: tests/wdio

|

||||

- command: tsc

|

||||

project: tests/wdio

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||
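The `strategy.matrix` block in the hunk above combines two axes with `include` and `exclude` entries, and the resulting job list is not obvious at a glance. The sketch below is a simplified model of the documented matrix rules, not GitHub's implementation: build the cross product, drop combinations matched by `exclude`, then let each `include` entry either extend the combinations whose axis values it agrees with or become a new combination. The function name `expand_matrix` is hypothetical; the axis values and entries are copied from the hunk.

```python
from itertools import product

AXES = {
    "command": ["lint", "lint:lockfile", "tsc", "prettier-check"],
    "project": ["web", "tests/wdio"],
}
INCLUDE = [
    {"command": "tsc", "project": "web", "extra_setup": "cd sfe/ && npm ci"},
    {"command": "lit-analyse", "project": "web",
     "extra_setup": "cd node_modules/@goauthentik\nln -s ../../src/ web"},
]
EXCLUDE = [
    {"command": "lint:lockfile", "project": "tests/wdio"},
    {"command": "tsc", "project": "tests/wdio"},
]


def expand_matrix(axes, include=(), exclude=()):
    """Simplified model: cross product, then exclude, then include."""
    def matches(combo, partial):
        return all(combo.get(key) == value for key, value in partial.items())

    originals = [dict(zip(axes, values)) for values in product(*axes.values())]
    originals = [c for c in originals if not any(matches(c, e) for e in exclude)]
    jobs = [dict(c) for c in originals]
    extra = []
    for entry in include:
        # Only the axis keys decide whether an entry fits an existing combination.
        axis_part = {k: v for k, v in entry.items() if k in axes}
        hit = False
        for original, job in zip(originals, jobs):
            if matches(original, axis_part):
                job.update(entry)
                hit = True
        if not hit:
            # No combination fits without overwriting an axis value: new job.
            extra.append(dict(entry))
    return jobs + extra


if __name__ == "__main__":
    for job in expand_matrix(AXES, INCLUDE, EXCLUDE):
        print(job["command"], "@", job["project"])
```

Under this model the `tsc`/`web` job gains `extra_setup`, while `lit-analyse` becomes an additional job because that command is not one of the listed axis values.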

@ -50,17 +28,77 @@ jobs:

|

||||

cache: "npm"

|

||||

cache-dependency-path: ${{ matrix.project }}/package-lock.json

|

||||

- working-directory: ${{ matrix.project }}/

|

||||

run: |

|

||||

npm ci

|

||||

${{ matrix.extra_setup }}

|

||||

run: npm ci

|

||||

- name: Generate API

|

||||

run: make gen-client-ts

|

||||

- name: Lint

|

||||

- name: Eslint

|

||||

working-directory: ${{ matrix.project }}/

|

||||

run: npm run ${{ matrix.command }}

|

||||

run: npm run lint

|

||||

lint-build:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version-file: web/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: web/package-lock.json

|

||||

- working-directory: web/

|

||||

run: npm ci

|

||||

- name: Generate API

|

||||

run: make gen-client-ts

|

||||

- name: TSC

|

||||

working-directory: web/

|

||||

run: npm run tsc

|

||||

lint-prettier:

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

project:

|

||||

- web

|

||||

- tests/wdio

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version-file: ${{ matrix.project }}/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: ${{ matrix.project }}/package-lock.json

|

||||

- working-directory: ${{ matrix.project }}/

|

||||

run: npm ci

|

||||

- name: Generate API

|

||||

run: make gen-client-ts

|

||||

- name: prettier

|

||||

working-directory: ${{ matrix.project }}/

|

||||

run: npm run prettier-check

|

||||

lint-lit-analyse:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version-file: web/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: web/package-lock.json

|

||||

- working-directory: web/

|

||||

run: |

|

||||

npm ci

|

||||

# lit-analyse doesn't understand path rewrites, so make it

|

||||

# belive it's an actual module

|

||||

cd node_modules/@goauthentik

|

||||

ln -s ../../src/ web

|

||||

- name: Generate API

|

||||

run: make gen-client-ts

|

||||

- name: lit-analyse

|

||||

working-directory: web/

|

||||

run: npm run lit-analyse

|

||||

ci-web-mark:

|

||||

needs:

|

||||

- lint

|

||||

- lint-eslint

|

||||

- lint-prettier

|

||||

- lint-lit-analyse

|

||||

- lint-build

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- run: echo mark

|

||||

@ -82,21 +120,3 @@ jobs:

|

||||

- name: build

|

||||

working-directory: web/

|

||||

run: npm run build

|

||||

test:

|

||||

needs:

|

||||

- ci-web-mark

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version-file: web/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: web/package-lock.json

|

||||

- working-directory: web/

|

||||

run: npm ci

|

||||

- name: Generate API

|

||||

run: make gen-client-ts

|

||||

- name: test

|

||||

working-directory: web/

|

||||

run: npm run test

|

||||

|

||||

20

.github/workflows/ci-website.yml

vendored

@ -12,21 +12,20 @@ on:

|

||||

- version-*

|

||||

|

||||

jobs:

|

||||

lint:

|

||||

lint-prettier:

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

command:

|

||||

- lint:lockfile

|

||||

- prettier-check

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

with:

|

||||

node-version-file: website/package.json

|

||||

cache: "npm"

|

||||

cache-dependency-path: website/package-lock.json

|

||||

- working-directory: website/

|

||||

run: npm ci

|

||||

- name: Lint

|

||||

- name: prettier

|

||||

working-directory: website/

|

||||

run: npm run ${{ matrix.command }}

|

||||

run: npm run prettier-check

|

||||

test:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

@ -49,6 +48,7 @@ jobs:

|

||||

matrix:

|

||||

job:

|

||||

- build

|

||||

- build-docs-only

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/setup-node@v4

|

||||

@ -63,7 +63,7 @@ jobs:

|

||||

run: npm run ${{ matrix.job }}

|

||||

ci-website-mark:

|

||||

needs:

|

||||

- lint

|

||||

- lint-prettier

|

||||

- test

|

||||

- build

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

43

.github/workflows/gen-update-webauthn-mds.yml

vendored

@ -1,43 +0,0 @@

|

||||

name: authentik-gen-update-webauthn-mds

|

||||

on:

|

||||

workflow_dispatch:

|

||||

schedule:

|

||||

- cron: '30 1 1,15 * *'

|

||||

|

||||

env:

|

||||

POSTGRES_DB: authentik

|

||||

POSTGRES_USER: authentik

|

||||

POSTGRES_PASSWORD: "EK-5jnKfjrGRm<77"

|

||||

|

||||

jobs:

|

||||

build:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- id: generate_token

|

||||

uses: tibdex/github-app-token@v2

|

||||

with:

|

||||

app_id: ${{ secrets.GH_APP_ID }}

|

||||

private_key: ${{ secrets.GH_APP_PRIVATE_KEY }}

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

token: ${{ steps.generate_token.outputs.token }}

|

||||

- name: Setup authentik env

|

||||

uses: ./.github/actions/setup

|

||||

- run: poetry run ak update_webauthn_mds

|

||||

- uses: peter-evans/create-pull-request@v6

|

||||

id: cpr

|

||||

with:

|

||||

token: ${{ steps.generate_token.outputs.token }}

|

||||

branch: update-fido-mds-client

|

||||

commit-message: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"

|

||||

title: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"

|

||||

body: "stages/authenticator_webauthn: Update FIDO MDS3 & Passkey aaguid blobs"

|

||||

delete-branch: true

|

||||

signoff: true

|

||||

# ID from https://api.github.com/users/authentik-automation[bot]

|

||||

author: authentik-automation[bot] <135050075+authentik-automation[bot]@users.noreply.github.com>

|

||||

- uses: peter-evans/enable-pull-request-automerge@v3

|

||||

with:

|

||||

token: ${{ steps.generate_token.outputs.token }}

|

||||

pull-request-number: ${{ steps.cpr.outputs.pull-request-number }}

|

||||

merge-method: squash

|

||||

26

.github/workflows/release-publish.yml

vendored

@ -14,14 +14,12 @@ jobs:

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v3.1.0

|

||||

uses: docker/setup-qemu-action@v3.0.0

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v3

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/server,beryju/authentik

|

||||

- name: Docker Login Registry

|

||||

@ -40,7 +38,7 @@ jobs:

|

||||

mkdir -p ./gen-ts-api

|

||||

mkdir -p ./gen-go-api

|

||||

- name: Build Docker Image

|

||||

uses: docker/build-push-action@v6

|

||||

uses: docker/build-push-action@v5

|

||||

with:

|

||||

context: .

|

||||

push: true

|

||||

@ -68,14 +66,12 @@ jobs:

|

||||

with:

|

||||

go-version-file: "go.mod"

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v3.1.0

|

||||

uses: docker/setup-qemu-action@v3.0.0

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v3

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/${{ matrix.type }},beryju/authentik-${{ matrix.type }}

|

||||

- name: make empty clients

|

||||

@ -94,7 +90,7 @@ jobs:

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

- name: Build Docker Image

|

||||

uses: docker/build-push-action@v6

|

||||

uses: docker/build-push-action@v5

|

||||

with:

|

||||

push: true

|

||||

tags: ${{ steps.ev.outputs.imageTags }}

|

||||

@ -155,12 +151,12 @@ jobs:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Run test suite in final docker images

|

||||

run: |

|

||||

echo "PG_PASS=$(openssl rand 32 | base64 -w 0)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(openssl rand 32 | base64 -w 0)" >> .env

|

||||

docker compose pull -q

|

||||

docker compose up --no-start

|

||||

docker compose start postgresql redis

|

||||

docker compose run -u root server test-all

|

||||

echo "PG_PASS=$(openssl rand -base64 32)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env

|

||||

docker-compose pull -q

|

||||

docker-compose up --no-start

|

||||

docker-compose start postgresql redis

|

||||

docker-compose run -u root server test-all

|

||||

sentry-release:

|

||||

needs:

|

||||

- build-server

|

||||
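Both shell forms in the hunk above, `openssl rand 32 | base64 -w 0` and `openssl rand -base64 32`, base64-encode 32 random bytes for `PG_PASS` and `AUTHENTIK_SECRET_KEY`. A minimal Python sketch of the same idea, using the standard `secrets` and `base64` modules, is given below. The helper names are hypothetical and this is an equivalent for illustration, not what the workflow runs.

```python
import base64
import secrets


def random_secret(num_bytes: int = 32) -> str:
    """Base64 text for `num_bytes` of cryptographically strong random data."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def env_lines() -> list:
    """The two `.env` lines the workflow step appends, with fresh values."""
    return [
        f"PG_PASS={random_secret()}",
        f"AUTHENTIK_SECRET_KEY={random_secret()}",
    ]


if __name__ == "__main__":
    for line in env_lines():
        print(line)
```

Thirty-two bytes encode to 44 base64 characters, so each value fits on a single line.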

@ -172,8 +168,6 @@ jobs:

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/server

|

||||

- name: Get static files from docker image

|

||||

|

||||

12

.github/workflows/release-tag.yml

vendored

@ -14,16 +14,16 @@ jobs:

|

||||

- uses: actions/checkout@v4

|

||||

- name: Pre-release test

|

||||

run: |

|

||||

echo "PG_PASS=$(openssl rand 32 | base64 -w 0)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(openssl rand 32 | base64 -w 0)" >> .env

|

||||

echo "PG_PASS=$(openssl rand -base64 32)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env

|

||||

docker buildx install

|

||||

mkdir -p ./gen-ts-api

|

||||

docker build -t testing:latest .

|

||||

echo "AUTHENTIK_IMAGE=testing" >> .env

|

||||

echo "AUTHENTIK_TAG=latest" >> .env

|

||||

docker compose up --no-start

|

||||

docker compose start postgresql redis

|

||||

docker compose run -u root server test-all

|

||||

docker-compose up --no-start

|

||||

docker-compose start postgresql redis

|

||||

docker-compose run -u root server test-all

|

||||

- id: generate_token

|

||||

uses: tibdex/github-app-token@v2

|

||||

with:

|

||||

@ -32,8 +32,6 @@ jobs:

|

||||

- name: prepare variables

|

||||

uses: ./.github/actions/docker-push-variables

|

||||

id: ev

|

||||

env:

|

||||

DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}

|

||||

with:

|

||||

image-name: ghcr.io/goauthentik/server

|

||||

- name: Create Release

|

||||

|

||||

2

.github/workflows/repo-stale.yml

vendored

@ -23,7 +23,7 @@ jobs:

|

||||

repo-token: ${{ steps.generate_token.outputs.token }}

|

||||

days-before-stale: 60

|

||||

days-before-close: 7

|

||||

exempt-issue-labels: pinned,security,pr_wanted,enhancement,bug/confirmed,enhancement/confirmed,question,status/reviewing

|

||||

exempt-issue-labels: pinned,security,pr_wanted,enhancement,bug/confirmed,enhancement/confirmed,question

|

||||

stale-issue-label: wontfix

|

||||

stale-issue-message: >

|

||||

This issue has been automatically marked as stale because it has not had

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

name: authentik-api-ts-publish

|

||||

name: authentik-web-api-publish

|

||||

on:

|

||||

push:

|

||||

branches: [main]

|

||||

@ -31,12 +31,7 @@ jobs:

|

||||

env:

|

||||

NODE_AUTH_TOKEN: ${{ secrets.NPM_PUBLISH_TOKEN }}

|

||||

- name: Upgrade /web

|

||||

working-directory: web

|

||||

run: |

|

||||

export VERSION=`node -e 'console.log(require("../gen-ts-api/package.json").version)'`

|

||||

npm i @goauthentik/api@$VERSION

|

||||

- name: Upgrade /web/sfe

|

||||

working-directory: web/sfe

|

||||

working-directory: web/

|

||||

run: |

|

||||

export VERSION=`node -e 'console.log(require("../gen-ts-api/package.json").version)'`

|

||||

npm i @goauthentik/api@$VERSION

|

||||

3

.vscode/extensions.json

vendored

@ -10,7 +10,8 @@

|

||||

"Gruntfuggly.todo-tree",

|

||||

"mechatroner.rainbow-csv",

|

||||

"ms-python.black-formatter",

|

||||

"charliermarsh.ruff",

|

||||

"ms-python.isort",

|

||||

"ms-python.pylint",

|

||||

"ms-python.python",

|

||||

"ms-python.vscode-pylance",

|

||||

"ms-python.black-formatter",

|

||||

|

||||

13

.vscode/settings.json

vendored

@ -4,21 +4,20 @@

|

||||

"asgi",

|

||||

"authentik",

|

||||

"authn",

|

||||

"entra",

|

||||

"goauthentik",

|

||||

"jwks",

|

||||

"kubernetes",

|

||||

"oidc",

|

||||

"openid",

|

||||

"passwordless",

|

||||

"plex",

|

||||

"saml",

|

||||

"scim",

|

||||

"slo",

|

||||

"sso",

|

||||

"totp",

|

||||

"traefik",

|

||||

"webauthn",

|

||||

"traefik",

|

||||

"passwordless",

|

||||

"kubernetes",

|

||||

"sso",

|

||||

"slo",

|

||||

"scim",

|

||||

],

|

||||

"todo-tree.tree.showCountsInTree": true,

|

||||

"todo-tree.tree.showBadges": true,

|

||||

|

||||

52

Dockerfile

@ -1,7 +1,7 @@

|

||||

# syntax=docker/dockerfile:1

|

||||

|

||||

# Stage 1: Build website

|

||||

FROM --platform=${BUILDPLATFORM} docker.io/node:22 as website-builder

|

||||

FROM --platform=${BUILDPLATFORM} docker.io/node:21 as website-builder

|

||||

|

||||

ENV NODE_ENV=production

|

||||

|

||||

@ -14,41 +14,30 @@ RUN --mount=type=bind,target=/work/website/package.json,src=./website/package.js

|

||||

|

||||

COPY ./website /work/website/

|

||||

COPY ./blueprints /work/blueprints/

|

||||

COPY ./schema.yml /work/

|

||||

COPY ./SECURITY.md /work/

|

||||

|

||||

RUN npm run build-bundled

|

||||

RUN npm run build-docs-only

|

||||

|

||||

# Stage 2: Build webui

|

||||

FROM --platform=${BUILDPLATFORM} docker.io/node:22 as web-builder

|

||||

FROM --platform=${BUILDPLATFORM} docker.io/node:21 as web-builder

|

||||

|

||||

ARG GIT_BUILD_HASH

|

||||

ENV GIT_BUILD_HASH=$GIT_BUILD_HASH

|

||||

ENV NODE_ENV=production

|

||||

|

||||

WORKDIR /work/web

|

||||

|

||||

RUN --mount=type=bind,target=/work/web/package.json,src=./web/package.json \

|

||||

--mount=type=bind,target=/work/web/package-lock.json,src=./web/package-lock.json \

|

||||

--mount=type=bind,target=/work/web/sfe/package.json,src=./web/sfe/package.json \

|

||||

--mount=type=bind,target=/work/web/sfe/package-lock.json,src=./web/sfe/package-lock.json \

|

||||

--mount=type=bind,target=/work/web/scripts,src=./web/scripts \

|

||||

--mount=type=cache,id=npm-web,sharing=shared,target=/root/.npm \

|

||||

npm ci --include=dev && \

|

||||

cd sfe && \

|

||||

npm ci --include=dev

|

||||

|

||||

COPY ./package.json /work

|

||||

COPY ./web /work/web/

|

||||

COPY ./website /work/website/

|

||||

COPY ./gen-ts-api /work/web/node_modules/@goauthentik/api

|

||||

|

||||

RUN npm run build && \

|

||||

cd sfe && \

|

||||

npm run build

|

||||

RUN npm run build

|

||||

|

||||

# Stage 3: Build go proxy

|

||||

FROM --platform=${BUILDPLATFORM} mcr.microsoft.com/oss/go/microsoft/golang:1.22-fips-bookworm AS go-builder

|

||||

FROM --platform=${BUILDPLATFORM} docker.io/golang:1.22.0-bookworm AS go-builder

|

||||

|

||||

ARG TARGETOS

|

||||

ARG TARGETARCH

|

||||

@ -59,11 +48,6 @@ ARG GOARCH=$TARGETARCH

|

||||

|

||||

WORKDIR /go/src/goauthentik.io

|

||||

|

||||

RUN --mount=type=cache,id=apt-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/var/cache/apt \

|

||||

dpkg --add-architecture arm64 && \

|

||||

apt-get update && \

|

||||

apt-get install -y --no-install-recommends crossbuild-essential-arm64 gcc-aarch64-linux-gnu

|

||||

|

||||

RUN --mount=type=bind,target=/go/src/goauthentik.io/go.mod,src=./go.mod \

|

||||

--mount=type=bind,target=/go/src/goauthentik.io/go.sum,src=./go.sum \

|

||||

--mount=type=cache,target=/go/pkg/mod \

|

||||

@ -78,17 +62,17 @@ COPY ./internal /go/src/goauthentik.io/internal

|

||||

COPY ./go.mod /go/src/goauthentik.io/go.mod

|

||||

COPY ./go.sum /go/src/goauthentik.io/go.sum

|

||||

|

||||

ENV CGO_ENABLED=0

|

||||

|

||||

RUN --mount=type=cache,sharing=locked,target=/go/pkg/mod \

|

||||

--mount=type=cache,id=go-build-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/root/.cache/go-build \

|

||||

if [ "$TARGETARCH" = "arm64" ]; then export CC=aarch64-linux-gnu-gcc && export CC_FOR_TARGET=gcc-aarch64-linux-gnu; fi && \

|

||||

CGO_ENABLED=1 GOEXPERIMENT="systemcrypto" GOFLAGS="-tags=requirefips" GOARM="${TARGETVARIANT#v}" \

|

||||

go build -o /go/authentik ./cmd/server

|

||||

GOARM="${TARGETVARIANT#v}" go build -o /go/authentik ./cmd/server

|

||||

|

||||

# Stage 4: MaxMind GeoIP

|

||||

FROM --platform=${BUILDPLATFORM} ghcr.io/maxmind/geoipupdate:v7.0.1 as geoip

|

||||

FROM --platform=${BUILDPLATFORM} ghcr.io/maxmind/geoipupdate:v6.1 as geoip

|

||||

|

||||

ENV GEOIPUPDATE_EDITION_IDS="GeoLite2-City GeoLite2-ASN"

|

||||

ENV GEOIPUPDATE_VERBOSE="1"

|

||||

ENV GEOIPUPDATE_VERBOSE="true"

|

||||

ENV GEOIPUPDATE_ACCOUNT_ID_FILE="/run/secrets/GEOIPUPDATE_ACCOUNT_ID"

|

||||

ENV GEOIPUPDATE_LICENSE_KEY_FILE="/run/secrets/GEOIPUPDATE_LICENSE_KEY"

|

||||

|

||||

@ -99,7 +83,7 @@ RUN --mount=type=secret,id=GEOIPUPDATE_ACCOUNT_ID \

|

||||

/bin/sh -c "/usr/bin/entry.sh || echo 'Failed to get GeoIP database, disabling'; exit 0"

|

||||

|

||||

# Stage 5: Python dependencies

|

||||

FROM ghcr.io/goauthentik/fips-python:3.12.3-slim-bookworm-fips-full AS python-deps

|

||||

FROM docker.io/python:3.12.2-slim-bookworm AS python-deps

|

||||

|

||||

WORKDIR /ak-root/poetry

|

||||

|

||||

@ -112,21 +96,19 @@ RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloa

|

||||

RUN --mount=type=cache,id=apt-$TARGETARCH$TARGETVARIANT,sharing=locked,target=/var/cache/apt \

|

||||

apt-get update && \

|

||||

# Required for installing pip packages

|

||||

apt-get install -y --no-install-recommends build-essential pkg-config libpq-dev

|

||||

apt-get install -y --no-install-recommends build-essential pkg-config libxmlsec1-dev zlib1g-dev libpq-dev

|

||||

|

||||

RUN --mount=type=bind,target=./pyproject.toml,src=./pyproject.toml \

|

||||

--mount=type=bind,target=./poetry.lock,src=./poetry.lock \

|

||||

--mount=type=cache,target=/root/.cache/pip \

|

||||

--mount=type=cache,target=/root/.cache/pypoetry \

|

||||

python -m venv /ak-root/venv/ && \

|

||||

bash -c "source ${VENV_PATH}/bin/activate && \

|

||||

pip3 install --upgrade pip && \

|

||||

pip3 install poetry && \

|

||||

poetry install --only=main --no-ansi --no-interaction --no-root && \

|

||||

pip install --force-reinstall /wheels/*"

|

||||

poetry install --only=main --no-ansi --no-interaction

|

||||

|

||||

# Stage 6: Run

|

||||

FROM ghcr.io/goauthentik/fips-python:3.12.3-slim-bookworm-fips-full AS final-image

|

||||

FROM docker.io/python:3.12.2-slim-bookworm AS final-image

|

||||

|

||||

ARG GIT_BUILD_HASH

|

||||

ARG VERSION

|

||||

@ -143,7 +125,7 @@ WORKDIR /

|

||||

# We cannot cache this layer otherwise we'll end up with a bigger image

|

||||

RUN apt-get update && \

|

||||

# Required for runtime

|

||||

apt-get install -y --no-install-recommends libpq5 libmaxminddb0 ca-certificates && \

|

||||

apt-get install -y --no-install-recommends libpq5 openssl libxmlsec1-openssl libmaxminddb0 ca-certificates && \

|

||||

# Required for bootstrap & healthcheck

|

||||

apt-get install -y --no-install-recommends runit && \

|

||||

apt-get clean && \

|

||||

@ -167,7 +149,7 @@ COPY --from=go-builder /go/authentik /bin/authentik

|

||||

COPY --from=python-deps /ak-root/venv /ak-root/venv

|

||||

COPY --from=web-builder /work/web/dist/ /web/dist/

|

||||

COPY --from=web-builder /work/web/authentik/ /web/authentik/

|

||||

COPY --from=website-builder /work/website/build/ /website/help/

|

||||

COPY --from=website-builder /work/website/help/ /website/help/

|

||||

COPY --from=geoip /usr/share/GeoIP /geoip

|

||||

|

||||

USER 1000

|

||||

@ -179,8 +161,6 @@ ENV TMPDIR=/dev/shm/ \

|

||||

VENV_PATH="/ak-root/venv" \

|

||||

POETRY_VIRTUALENVS_CREATE=false

|

||||

|

||||

ENV GOFIPS=1

|

||||

|

||||

HEALTHCHECK --interval=30s --timeout=30s --start-period=60s --retries=3 CMD [ "ak", "healthcheck" ]

|

||||

|

||||

ENTRYPOINT [ "dumb-init", "--", "ak" ]

|

||||

|

||||

56

Makefile

@ -9,7 +9,6 @@ PY_SOURCES = authentik tests scripts lifecycle .github

|

||||

DOCKER_IMAGE ?= "authentik:test"

|

||||

|

||||

GEN_API_TS = "gen-ts-api"

|

||||

GEN_API_PY = "gen-py-api"

|

||||

GEN_API_GO = "gen-go-api"

|

||||

|

||||

pg_user := $(shell python -m authentik.lib.config postgresql.user 2>/dev/null)

|

||||

@ -19,7 +18,6 @@ pg_name := $(shell python -m authentik.lib.config postgresql.name 2>/dev/null)

|

||||

CODESPELL_ARGS = -D - -D .github/codespell-dictionary.txt \

|

||||

-I .github/codespell-words.txt \

|

||||

-S 'web/src/locales/**' \

|

||||

-S 'website/developer-docs/api/reference/**' \

|

||||

authentik \

|

||||

internal \

|

||||

cmd \

|

||||

@ -47,12 +45,12 @@ test-go:

|

||||

go test -timeout 0 -v -race -cover ./...

|

||||

|

||||

test-docker: ## Run all tests in a docker-compose

|

||||

echo "PG_PASS=$(shell openssl rand 32 | base64 -w 0)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(shell openssl rand 32 | base64 -w 0)" >> .env

|

||||

docker compose pull -q

|

||||

docker compose up --no-start

|

||||

docker compose start postgresql redis

|

||||

docker compose run -u root server test-all

|

||||

echo "PG_PASS=$(openssl rand -base64 32)" >> .env

|

||||

echo "AUTHENTIK_SECRET_KEY=$(openssl rand -base64 32)" >> .env

|

||||

docker-compose pull -q

|

||||

docker-compose up --no-start

|

||||

docker-compose start postgresql redis

|

||||

docker-compose run -u root server test-all

|

||||

rm -f .env

|

||||

|

||||

test: ## Run the server tests and produce a coverage report (locally)

|

||||

@ -60,15 +58,16 @@ test: ## Run the server tests and produce a coverage report (locally)

|

||||

coverage html

|

||||

coverage report

|

||||

|

||||

lint-fix: lint-codespell ## Lint and automatically fix errors in the python source code. Reports spelling errors.

|

||||

lint-fix: ## Lint and automatically fix errors in the python source code. Reports spelling errors.

|

||||

isort $(PY_SOURCES)

|

||||

black $(PY_SOURCES)

|

||||

ruff check --fix $(PY_SOURCES)

|

||||

|

||||

lint-codespell: ## Reports spelling errors.

|

||||

ruff --fix $(PY_SOURCES)

|

||||

codespell -w $(CODESPELL_ARGS)

|

||||

|

||||

lint: ## Lint the python and golang sources

|

||||

bandit -r $(PY_SOURCES) -x web/node_modules -x tests/wdio/node_modules -x website/node_modules

|

||||

bandit -r $(PY_SOURCES) -x node_modules

|

||||

./web/node_modules/.bin/pyright $(PY_SOURCES)

|

||||

pylint $(PY_SOURCES)

|

||||

golangci-lint run -v

|

||||

|

||||

core-install:

|

||||

@ -141,10 +140,7 @@ gen-clean-ts: ## Remove generated API client for Typescript

|

||||

gen-clean-go: ## Remove generated API client for Go

|

||||

rm -rf ./${GEN_API_GO}/

|

||||

|

||||

gen-clean-py: ## Remove generated API client for Python

|

||||

rm -rf ./${GEN_API_PY}/

|

||||

|

||||

gen-clean: gen-clean-ts gen-clean-go gen-clean-py ## Remove generated API clients

|

||||

gen-clean: gen-clean-ts gen-clean-go ## Remove generated API clients

|

||||

|

||||

gen-client-ts: gen-clean-ts ## Build and install the authentik API for Typescript into the authentik UI Application

|

||||

docker run \

|

||||

@ -162,20 +158,6 @@ gen-client-ts: gen-clean-ts ## Build and install the authentik API for Typescri

|

||||

cd ./${GEN_API_TS} && npm i

|

||||

\cp -rf ./${GEN_API_TS}/* web/node_modules/@goauthentik/api

|

||||

|

||||

gen-client-py: gen-clean-py ## Build and install the authentik API for Python

|

||||

docker run \

|

||||

--rm -v ${PWD}:/local \

|

||||

--user ${UID}:${GID} \

|

||||

docker.io/openapitools/openapi-generator-cli:v7.4.0 generate \

|

||||

-i /local/schema.yml \

|

||||

-g python \

|

||||

-o /local/${GEN_API_PY} \

|

||||

-c /local/scripts/api-py-config.yaml \

|

||||

--additional-properties=packageVersion=${NPM_VERSION} \

|

||||

--git-repo-id authentik \

|

||||

--git-user-id goauthentik

|

||||

pip install ./${GEN_API_PY}

|

||||

|

||||

gen-client-go: gen-clean-go ## Build and install the authentik API for Golang

|

||||

mkdir -p ./${GEN_API_GO} ./${GEN_API_GO}/templates

|

||||

wget https://raw.githubusercontent.com/goauthentik/client-go/main/config.yaml -O ./${GEN_API_GO}/config.yaml

|

||||

@ -241,7 +223,7 @@ website: website-lint-fix website-build ## Automatically fix formatting issues

|

||||

website-install:

|

||||

cd website && npm ci

|

||||

|

||||

website-lint-fix: lint-codespell

|

||||

website-lint-fix:

|

||||

cd website && npm run prettier

|

||||

|

||||

website-build:

|

||||

@ -255,7 +237,6 @@ website-watch: ## Build and watch the documentation website, updating automatic

|

||||

#########################

|

||||

|

||||

docker: ## Build a docker image of the current source tree

|

||||

mkdir -p ${GEN_API_TS}

|

||||

DOCKER_BUILDKIT=1 docker build . --progress plain --tag ${DOCKER_IMAGE}

|

||||

|

||||

#########################

|

||||

@ -268,6 +249,9 @@ ci--meta-debug:

|

||||

python -V

|

||||

node --version

|

||||

|

||||

ci-pylint: ci--meta-debug

|

||||

pylint $(PY_SOURCES)

|

||||

|

||||

ci-black: ci--meta-debug

|

||||

black --check $(PY_SOURCES)

|

||||

|

||||

@ -277,8 +261,14 @@ ci-ruff: ci--meta-debug

|

||||

ci-codespell: ci--meta-debug

|

||||

codespell $(CODESPELL_ARGS) -s

|

||||

|

||||

ci-isort: ci--meta-debug

|

||||

isort --check $(PY_SOURCES)

|

||||

|

||||

ci-bandit: ci--meta-debug

|

||||

bandit -r $(PY_SOURCES)

|

||||

|

||||

ci-pyright: ci--meta-debug

|

||||

./web/node_modules/.bin/pyright $(PY_SOURCES)

|

||||

|

||||

ci-pending-migrations: ci--meta-debug

|

||||

ak makemigrations --check

|

||||

|

||||

@ -25,10 +25,10 @@ For bigger setups, there is a Helm Chart [here](https://github.com/goauthentik/h

|

||||

|

||||

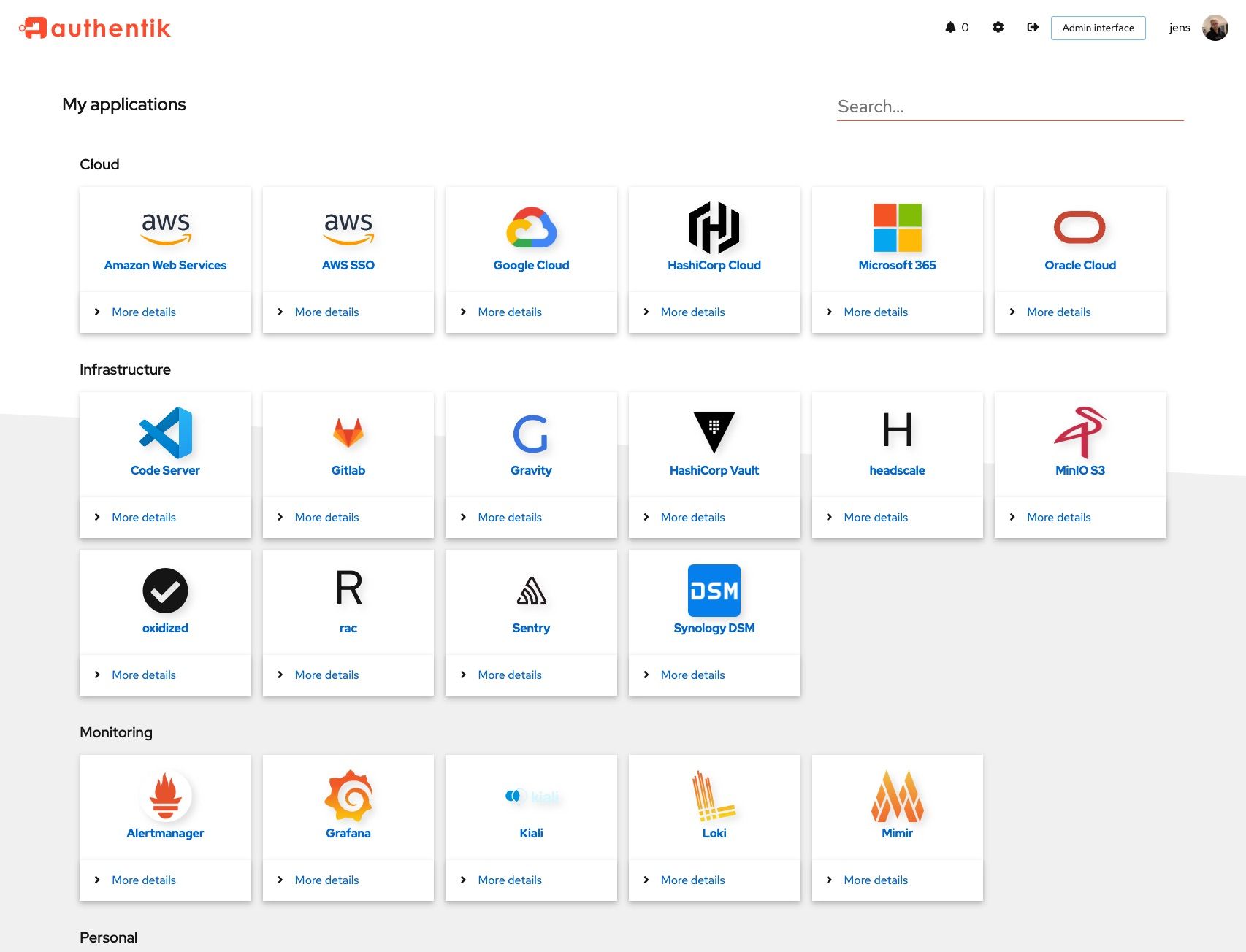

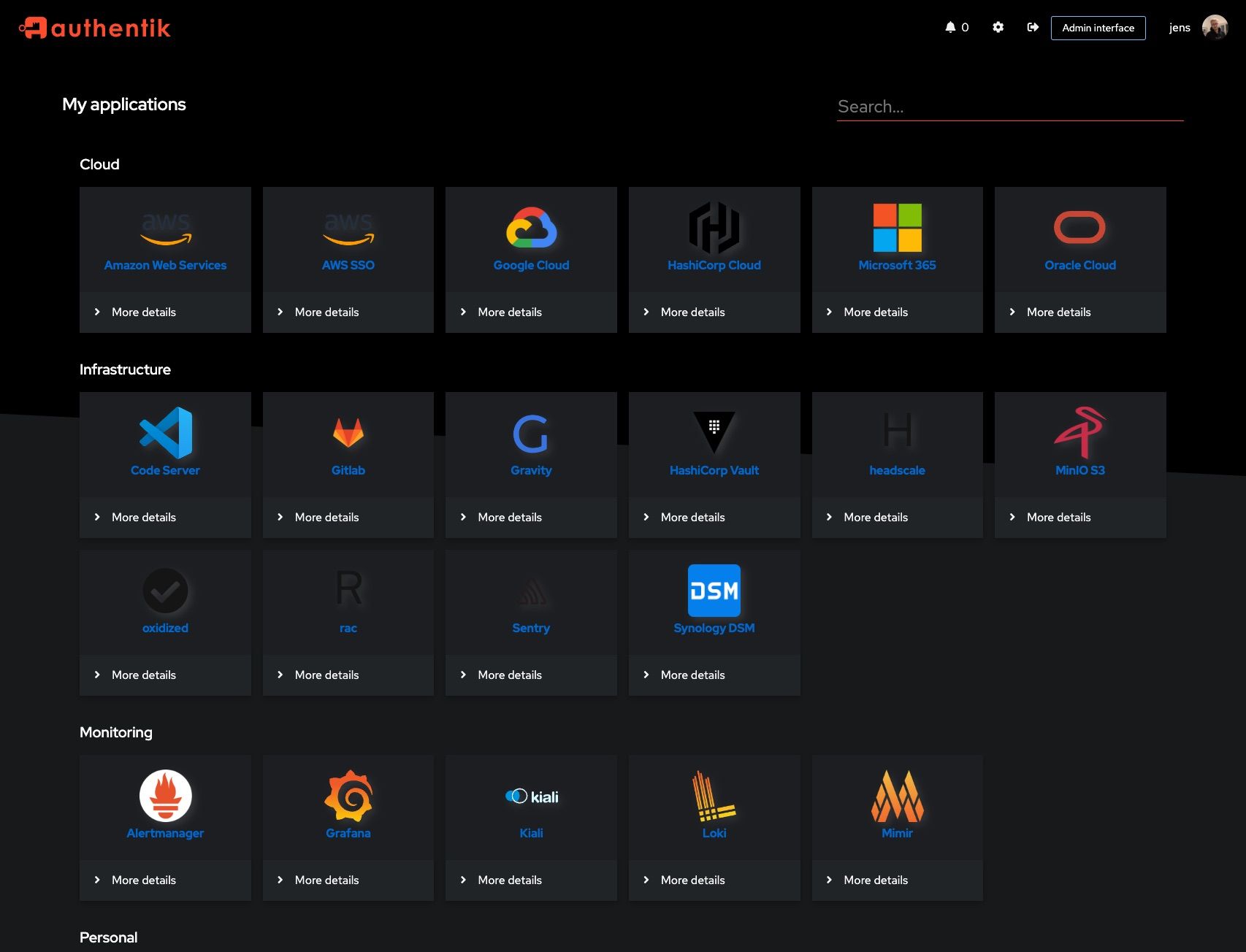

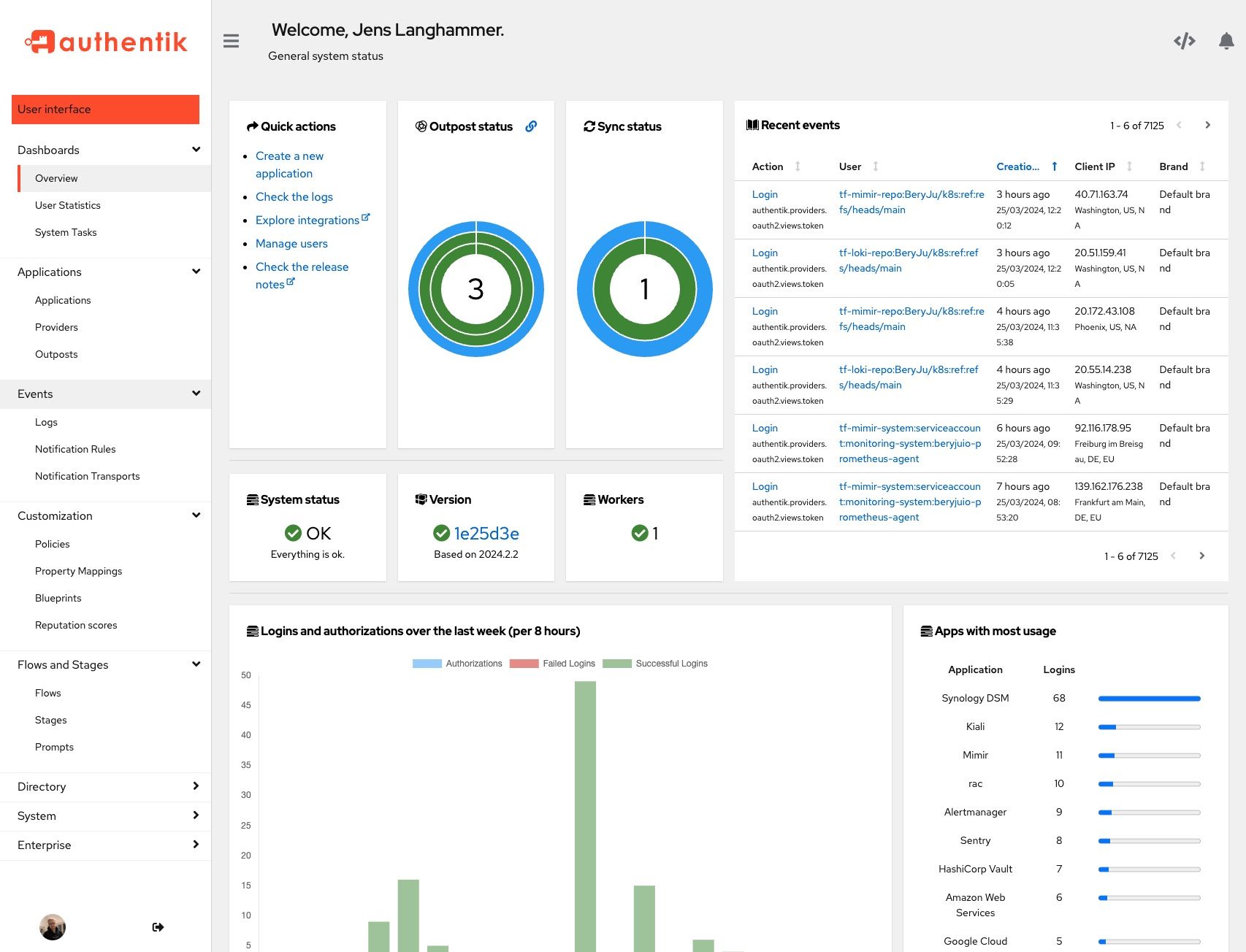

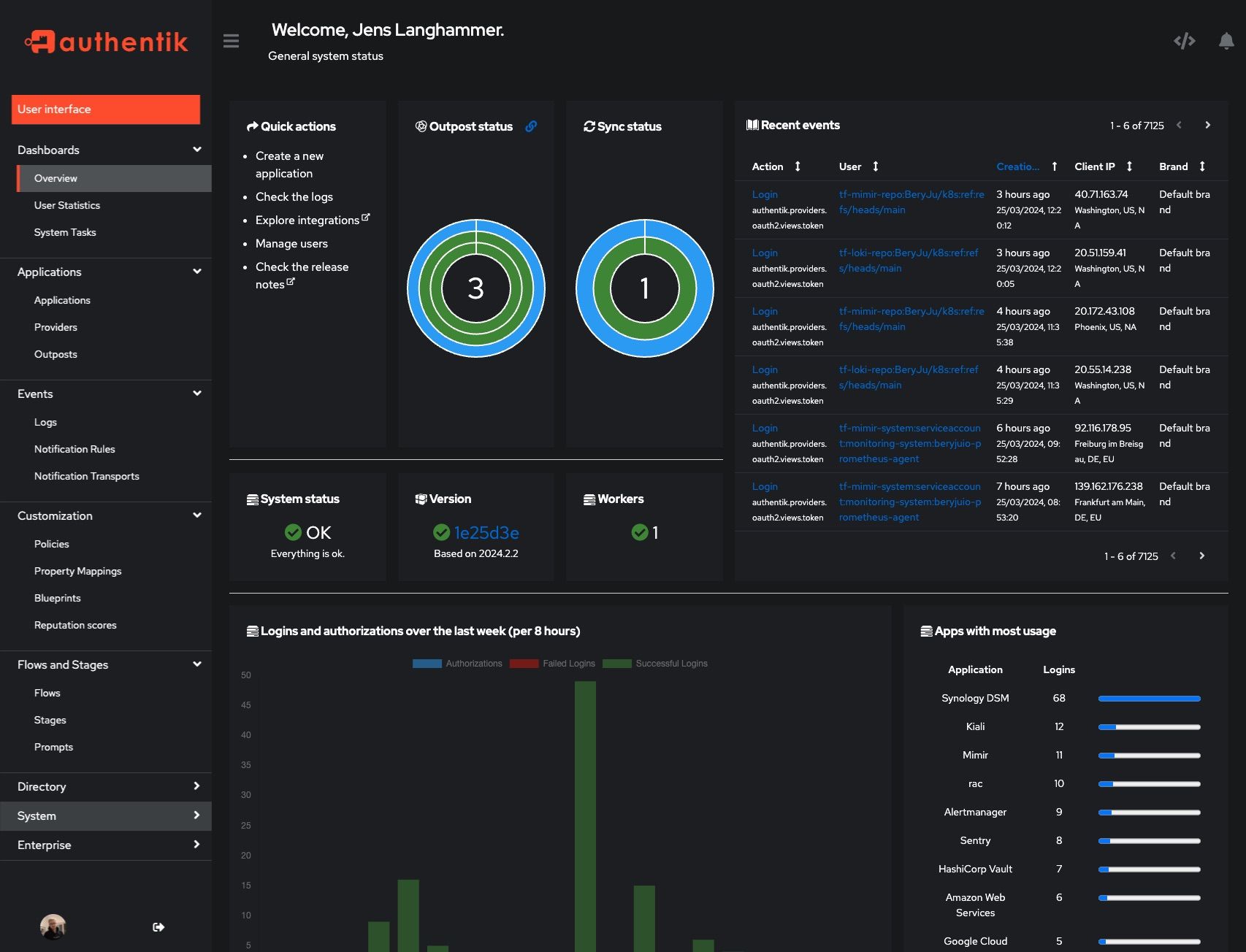

## Screenshots

|

||||

|

||||

| Light | Dark |

|

||||

| ----------------------------------------------------------- | ---------------------------------------------------------- |

|

||||

|  |  |

|

||||

|  |  |

|

||||

| Light | Dark |

|

||||

| ------------------------------------------------------ | ----------------------------------------------------- |

|

||||

|  |  |

|

||||

|  |  |

|

||||

|

||||

## Development

|

||||

|

||||

|

||||

20

SECURITY.md

@ -18,10 +18,10 @@ Even if the issue is not a CVE, we still greatly appreciate your help in hardeni

|

||||

|

||||

(.x being the latest patch release for each version)

|

||||

|

||||

| Version | Supported |

|

||||

| -------- | --------- |

|

||||

| 2024.4.x | ✅ |

|

||||

| 2024.6.x | ✅ |

|

||||

| Version | Supported |

|

||||

| --- | --- |

|

||||

| 2023.6.x | ✅ |

|

||||

| 2023.8.x | ✅ |

|

||||

|

||||

## Reporting a Vulnerability

|

||||

|

||||

@ -31,12 +31,12 @@ To report a vulnerability, send an email to [security@goauthentik.io](mailto:se

|

||||

|

||||

authentik reserves the right to reclassify CVSS as necessary. To determine severity, we will use the CVSS calculator from NVD (https://nvd.nist.gov/vuln-metrics/cvss/v3-calculator). The calculated CVSS score will then be translated into one of the following categories:

|

||||

|

||||

| Score | Severity |

|

||||

| ---------- | -------- |

|

||||

| 0.0 | None |

|

||||

| 0.1 – 3.9 | Low |

|

||||

| 4.0 – 6.9 | Medium |

|

||||

| 7.0 – 8.9 | High |

|

||||

| Score | Severity |

|

||||

| --- | --- |

|

||||

| 0.0 | None |

|

||||

| 0.1 – 3.9 | Low |

|

||||

| 4.0 – 6.9 | Medium |

|

||||

| 7.0 – 8.9 | High |

|

||||

| 9.0 – 10.0 | Critical |
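
As a reading aid for the score-to-severity table in this hunk, the mapping can be sketched in Python. The function name `cvss_severity` is hypothetical and is not part of authentik; the bands are taken from the table rows shown here, and the range check is an assumption added for the sketch.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3 base score to the severity label used in SECURITY.md.

    Hypothetical helper for illustration; bands follow the table in the diff.
    """
    # Assumption: scores outside 0.0-10.0 are rejected rather than clamped.
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"
```

CVSS base scores are reported to one decimal place, so the band edges (3.9/4.0, 6.9/7.0, 8.9/9.0) leave no gaps in practice.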

|

||||

|

||||

## Disclosure process

|

||||

|

||||

@ -1,12 +1,13 @@

|

||||

"""authentik root module"""

|

||||

|

||||

from os import environ

|

||||

from typing import Optional

|

||||

|

||||

__version__ = "2024.6.1"

|

||||

__version__ = "2024.2.1"

|

||||

ENV_GIT_HASH_KEY = "GIT_BUILD_HASH"

|

||||

|

||||

|

||||

def get_build_hash(fallback: str | None = None) -> str:

|

||||

def get_build_hash(fallback: Optional[str] = None) -> str:

|

||||

"""Get build hash"""

|

||||

build_hash = environ.get(ENV_GIT_HASH_KEY, fallback if fallback else "")

|

||||

return fallback if build_hash == "" and fallback else build_hash
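
The two `get_build_hash` signatures in this hunk differ only in annotation style (`str | None` versus `Optional[str]`); the body is the same on both sides. A minimal sketch of the fallback logic follows. The `env` parameter is an assumption made here so the example runs without touching `os.environ`; the function in the diff reads `environ` directly.

```python
from typing import Mapping, Optional

ENV_GIT_HASH_KEY = "GIT_BUILD_HASH"


def get_build_hash(env: Mapping[str, str], fallback: Optional[str] = None) -> str:
    """Same logic as the function in the diff, with the environment injected."""
    # A missing variable defaults to the fallback (or "" when no fallback is given).
    build_hash = env.get(ENV_GIT_HASH_KEY, fallback if fallback else "")
    # A variable that is set but empty also yields the fallback.
    return fallback if build_hash == "" and fallback else build_hash


# Illustrative calls; "dev" and "abc123" are made-up values.
unset_no_fallback = get_build_hash({})
unset_with_fallback = get_build_hash({}, "dev")
empty_with_fallback = get_build_hash({ENV_GIT_HASH_KEY: ""}, "dev")
set_with_fallback = get_build_hash({ENV_GIT_HASH_KEY: "abc123"}, "dev")
```

The second check in the return statement only matters when the variable is set but empty; an unset variable is already handled by the default passed to `get`.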

|

||||

|

||||

@ -2,21 +2,18 @@

|

||||

|

||||

import platform

|

||||

from datetime import datetime

|

||||

from ssl import OPENSSL_VERSION

|

||||

from sys import version as python_version

|

||||

from typing import TypedDict

|

||||

|

||||

from cryptography.hazmat.backends.openssl.backend import backend

|

||||

from django.utils.timezone import now

|

||||

from drf_spectacular.utils import extend_schema

|

||||

from gunicorn import version_info as gunicorn_version

|

||||

from rest_framework.fields import SerializerMethodField

|

||||

from rest_framework.request import Request

|

||||

from rest_framework.response import Response

|

||||

from rest_framework.views import APIView

|

||||

|

||||

from authentik import get_full_version

|

||||

from authentik.core.api.utils import PassiveSerializer

|

||||

from authentik.enterprise.license import LicenseKey

|

||||

from authentik.lib.config import CONFIG

|

||||

from authentik.lib.utils.reflection import get_env

|

||||

from authentik.outposts.apps import MANAGED_OUTPOST

|

||||

@ -28,13 +25,11 @@ class RuntimeDict(TypedDict):

|

||||

"""Runtime information"""

|

||||

|

||||

python_version: str

|

||||

gunicorn_version: str

|

||||

environment: str

|

||||

architecture: str

|

||||

platform: str

|

||||

uname: str

|

||||

openssl_version: str

|

||||

openssl_fips_enabled: bool | None

|

||||

authentik_version: str

|

||||

|

||||

|

||||

class SystemInfoSerializer(PassiveSerializer):

|

||||

@ -69,15 +64,11 @@ class SystemInfoSerializer(PassiveSerializer):

|

||||

def get_runtime(self, request: Request) -> RuntimeDict:

|

||||

"""Get versions"""

|

||||

return {

|

||||

"architecture": platform.machine(),

|

||||

"authentik_version": get_full_version(),

|

||||

"environment": get_env(),

|

||||

"openssl_fips_enabled": (

|

||||

backend._fips_enabled if LicenseKey.get_total().is_valid() else None

|

||||

),

|

||||

"openssl_version": OPENSSL_VERSION,

|

||||

"platform": platform.platform(),

|

||||

"python_version": python_version,

|

||||

"gunicorn_version": ".".join(str(x) for x in gunicorn_version),

|

||||

"environment": get_env(),

|

||||

"architecture": platform.machine(),

|

||||

"platform": platform.platform(),

|

||||

"uname": " ".join(platform.uname()),

|

||||

}

|

||||

|

||||

|

||||

@ -10,7 +10,7 @@ from rest_framework.response import Response

|

||||

from rest_framework.views import APIView

|

||||

|

||||

from authentik import __version__, get_build_hash

|

||||

from authentik.admin.tasks import VERSION_CACHE_KEY, VERSION_NULL, update_latest_version

|

||||

from authentik.admin.tasks import VERSION_CACHE_KEY, update_latest_version

|

||||

from authentik.core.api.utils import PassiveSerializer

|

||||

|

||||

|

||||

@ -19,7 +19,6 @@ class VersionSerializer(PassiveSerializer):

|

||||

|

||||

version_current = SerializerMethodField()

|

||||

version_latest = SerializerMethodField()

|

||||

version_latest_valid = SerializerMethodField()

|

||||

build_hash = SerializerMethodField()

|

||||

outdated = SerializerMethodField()

|

||||

|

||||

@ -39,10 +38,6 @@ class VersionSerializer(PassiveSerializer):

|

||||

return __version__

|

||||

return version_in_cache

|

||||

|

||||

def get_version_latest_valid(self, _) -> bool:

|

||||

"""Check if latest version is valid"""

|

||||

return cache.get(VERSION_CACHE_KEY) != VERSION_NULL

|

||||

|

||||

def get_outdated(self, instance) -> bool:

|

||||

"""Check if we're running the latest version"""

|

||||

return parse(self.get_version_current(instance)) < parse(self.get_version_latest(instance))

|

||||

|

||||

@ -18,7 +18,6 @@ from authentik.lib.utils.http import get_http_session

|

||||

from authentik.root.celery import CELERY_APP

|

||||

|

||||

LOGGER = get_logger()

|

||||

VERSION_NULL = "0.0.0"

|

||||

VERSION_CACHE_KEY = "authentik_latest_version"

|

||||

VERSION_CACHE_TIMEOUT = 8 * 60 * 60 # 8 hours

|

||||

# Chop off the first ^ because we want to search the entire string

|

||||

@ -56,7 +55,7 @@ def clear_update_notifications():

|

||||

def update_latest_version(self: SystemTask):

|

||||

"""Update latest version info"""

|

||||

if CONFIG.get_bool("disable_update_check"):

|

||||

cache.set(VERSION_CACHE_KEY, VERSION_NULL, VERSION_CACHE_TIMEOUT)

|

||||

cache.set(VERSION_CACHE_KEY, "0.0.0", VERSION_CACHE_TIMEOUT)

|

||||

self.set_status(TaskStatus.WARNING, "Version check disabled.")

|

||||

return

|

||||

try:

|

||||

@ -83,7 +82,7 @@ def update_latest_version(self: SystemTask):

|

||||

event_dict["message"] = f"Changelog: {match.group()}"

|

||||

Event.new(EventAction.UPDATE_AVAILABLE, **event_dict).save()

|

||||

except (RequestException, IndexError) as exc:

|

||||

cache.set(VERSION_CACHE_KEY, VERSION_NULL, VERSION_CACHE_TIMEOUT)

|

||||

cache.set(VERSION_CACHE_KEY, "0.0.0", VERSION_CACHE_TIMEOUT)

|

||||

self.set_error(exc)

|

||||

|

||||

|

||||

|

||||

@ -10,3 +10,26 @@ class AuthentikAPIConfig(AppConfig):

|

||||

label = "authentik_api"

|

||||

mountpoint = "api/"

|

||||

verbose_name = "authentik API"

|

||||

|

||||

def ready(self) -> None:

|

||||

from drf_spectacular.extensions import OpenApiAuthenticationExtension

|

||||

|

||||

from authentik.api.authentication import TokenAuthentication

|

||||

|

||||

# Class is defined here as it needs to be created early enough that drf-spectacular will

|

||||

# find it, but also won't cause any import issues

|

||||

# pylint: disable=unused-variable

|

||||

class TokenSchema(OpenApiAuthenticationExtension):

|

||||

"""Auth schema"""

|

||||

|

||||

target_class = TokenAuthentication

|

||||

name = "authentik"

|

||||

|

||||

def get_security_definition(self, auto_schema):

|

||||

"""Auth schema"""

|

||||

return {

|

||||

"type": "apiKey",

|

||||

"in": "header",

|

||||

"name": "Authorization",

|

||||

"scheme": "bearer",

|

||||

}

|

||||

|

||||

@ -1,10 +1,9 @@

|

||||

"""API Authentication"""

|

||||

|

||||

from hmac import compare_digest

|

||||

from typing import Any

|

||||

from typing import Any, Optional

|

||||

|

||||

from django.conf import settings

|

||||

from drf_spectacular.extensions import OpenApiAuthenticationExtension

|

||||

from rest_framework.authentication import BaseAuthentication, get_authorization_header

|

||||

from rest_framework.exceptions import AuthenticationFailed

|

||||

from rest_framework.request import Request

|

||||

@ -18,7 +17,7 @@ from authentik.providers.oauth2.constants import SCOPE_AUTHENTIK_API

|

||||

LOGGER = get_logger()

|

||||

|

||||

|

||||

def validate_auth(header: bytes) -> str | None:

|

||||

def validate_auth(header: bytes) -> Optional[str]:

|

||||

"""Validate that the header is in a correct format,

|

||||

returns type and credentials"""

|

||||

auth_credentials = header.decode().strip()

|

||||

@ -33,7 +32,7 @@ def validate_auth(header: bytes) -> str | None:

|

||||

return auth_credentials

|

||||

|

||||

|

||||

def bearer_auth(raw_header: bytes) -> User | None:

|

||||

def bearer_auth(raw_header: bytes) -> Optional[User]:

|

||||

"""raw_header in the Format of `Bearer ....`"""

|

||||

user = auth_user_lookup(raw_header)

|

||||

if not user:

|

||||

@ -43,7 +42,7 @@ def bearer_auth(raw_header: bytes) -> User | None:

|

||||

return user

|

||||

|

||||

|

||||

def auth_user_lookup(raw_header: bytes) -> User | None:

|

||||

def auth_user_lookup(raw_header: bytes) -> Optional[User]:

|

||||